NVIDIA GeForce GTX 1080 & GTX 1070 Founders Editions Review: Kicking Off the FinFET Generation

source:http://www.anandtech.com/show/10325/the-nvidia-geforce-gtx-1080-and-1070-founders-edition-review

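This is a placeholder post for now: if you can read the English, go ahead; if not, just look at the pictures.

The 1060 and 480 reviews come out on Friday, so I will translate those two first.

I will translate this one bit by bit when I have time; it is just very long.

Two months after the 1080 launched and one month after the 1070, AnandTech has finally published this long-delayed review.

Unlike the somewhat hasty day-one reviews from most outlets, AnandTech prefers to dig deep, which makes its reviews more worthwhile to study.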

It has taken about 2 years longer than we’d normally see, but the next full generation of GPUs is finally upon us. Powered by FinFET based nodes at TSMC and GlobalFoundries, both NVIDIA and AMD have released new GPUs with new architectures built on new manufacturing nodes. AMD and NVIDIA did an amazing job making the best of 28nm over the 4 year stretch, but now at long last true renewal is at hand for the discrete GPU market.

Back in May we took a first look at the first of these cards, NVIDIA’s GeForce GTX 1080 Founders Edition. Launched at $700, it was immediately the flagship for the FinFET generation. Now today, at long (long) last, we will be taking a complete, in-depth look at the GTX 1080 Founders Edition and its sibling the GTX 1070 Founders Edition. Architecture, overclocking, more architecture, new memory technologies, new features, and of course copious benchmarks. So let’s get started on this belated look at the latest generation of GPUs and video cards from NVIDIA.

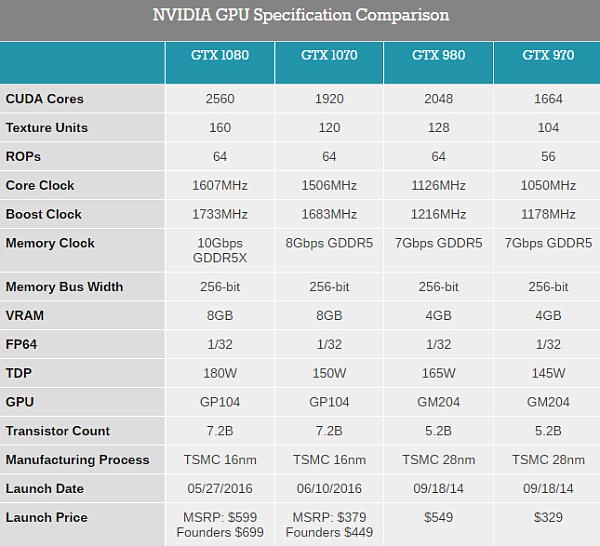

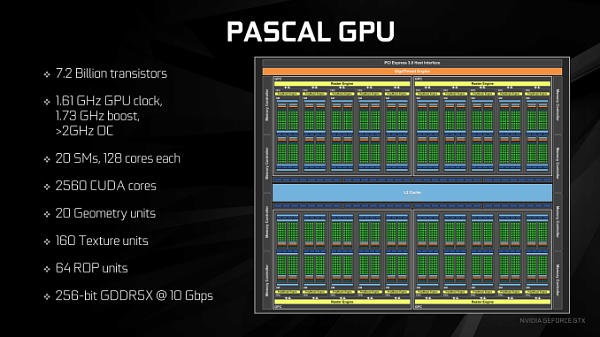

As a quick refresher, here are the specifications for the new cards. At a high level the Pascal architecture (as implemented in GP104) is a mix of old and new; it’s not a revolution, but it’s an important refinement. Maxwell as an architecture was very successful for NVIDIA both at the consumer level and the professional level, and for the consumer iterations of Pascal, NVIDIA has not made any radical changes. The basic throughput of the architecture has not changed: the ALUs, texture units, ROPs, and caches all perform similarly to how they did in GM2xx.

Consequently the performance aspects of consumer Pascal (we’ll ignore GP100 for the moment) are pretty easy to understand. NVIDIA’s focus on this generation has been on pouring on the clockspeed to push total compute throughput to 8.9 TFLOPs, and updating their memory subsystem to feed the beast that is GP104.

GeForce GTX 1080

The GeForce GTX 1080 is a fully enabled implementation of GP104. This means 2560 CUDA cores split up over 20 SMs operating at a blistering boost clock of 1733MHz. NVIDIA is positioning GTX 1080 as a full generational update over GTX 980, and thanks to a combination of a slightly wider GPU and a much faster clockspeed, they can generally deliver on this. By the numbers, GTX 1080 offers 78% more raw compute, texturing, and geometry performance, and 43% more ROP throughput. Of course the latter is as much a product of memory bandwidth as it is the ROPs themselves, and for that NVIDIA has some new memory technologies.
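The percentages above can be reproduced with a little arithmetic. The following is a minimal sketch, assuming peak FP32 throughput is counted as one fused multiply-add (2 FLOPs) per CUDA core per clock at the boost clock. The GTX 980 figures (2048 CUDA cores, 1216MHz boost, 64 ROPs) are not given in the text and are assumed from its reference specifications; the function name is purely illustrative.

```python
def peak_fp32_tflops(cuda_cores: int, boost_mhz: float) -> float:
    """Peak FP32 rate, counting one FMA (2 FLOPs) per core per clock."""
    return cuda_cores * 2 * boost_mhz * 1e6 / 1e12

# GTX 1080 figures come from the paragraph above.
gtx_1080_tflops = peak_fp32_tflops(2560, 1733)
# GTX 980 figures are an assumption (reference specs, not stated in the text).
gtx_980_tflops = peak_fp32_tflops(2048, 1216)

compute_uplift = gtx_1080_tflops / gtx_980_tflops - 1
# Both cards have 64 ROPs (assumed for GTX 980), so ROP throughput
# scales with the clockspeed ratio alone.
rop_uplift = 1733 / 1216 - 1

print(f"GTX 1080: {gtx_1080_tflops:.2f} TFLOPs")
print(f"GTX 980:  {gtx_980_tflops:.2f} TFLOPs")
print(f"Compute uplift: {compute_uplift * 100:.0f}%")
print(f"ROP uplift:     {rop_uplift * 100:.0f}%")
```

Under these assumptions the wider GPU contributes 25% more cores and the clockspeed does the rest, which is why the ROP gain (clock only) is smaller than the compute gain.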

Feeding the beast that is GTX 1080 is 8GB of GDDR5X. A new memory standard that extends the effective memory bandwidth of GDDR5, GTX 1080’s GDDR5X runs at 10Gbps, and is attached to a 256-bit memory bus. This gives GTX 1080 a full 320GB/sec of memory bandwidth to play with, 43% more than GTX 980. And as we’ll see in the coming architectural pages, these raw numbers don’t factor in the architectural improvements that allow the Pascal GPUs to stretch their memory bandwidth even further.
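As a rough check of the bandwidth figure, peak memory bandwidth is the per-pin data rate multiplied by the bus width, divided by 8 bits per byte. This sketch assumes the GTX 980 uses 7Gbps GDDR5 on a 256-bit bus, which is not stated in the text; the helper name is hypothetical.

```python
def bandwidth_gb_per_s(data_rate_gbps: float, bus_width_bits: int) -> float:
    """Peak bandwidth in GB/sec: per-pin rate times bus width, 8 bits per byte."""
    return data_rate_gbps * bus_width_bits / 8

gtx_1080_bw = bandwidth_gb_per_s(10, 256)  # 10Gbps GDDR5X, from the text
gtx_980_bw = bandwidth_gb_per_s(7, 256)    # assumed: 7Gbps GDDR5, 256-bit bus

bw_uplift = gtx_1080_bw / gtx_980_bw - 1

print(f"GTX 1080: {gtx_1080_bw:.0f} GB/sec")
print(f"GTX 980:  {gtx_980_bw:.0f} GB/sec")
print(f"Uplift:   {bw_uplift * 100:.0f}%")
```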

Finally, GTX 1080’s TDP is rated at 180W. This is a slight increase from the past generation, where GTX 980 required 165W. Video card specifications are of course a sliding scale, balancing desired performance with cooling capabilities and power consumption, and ultimately NVIDIA has opted to eat a slight increase in power consumption to allow GTX 1080 to deliver more performance than it otherwise would.

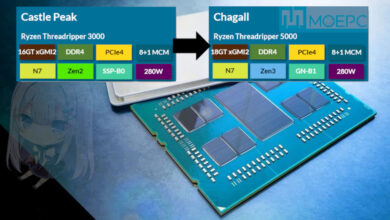

GeForce GTX 1070

Meanwhile below the GTX 1080 we have its lower price and lower performance sibling, the GTX 1070. The standard high-end salvage part, GTX 1070 trades off fewer functional blocks and the lower resulting performance in exchange for a significantly lower price than the GTX 1080. From a hardware perspective, the GTX 1070 utilizes GP104 with 1 of the 4 Graphics Processing Clusters (GPCs) disabled. Relative to GTX 1080, this knocks off around 25% of the shading/texturing/compute performance. However the memory controllers and ROP partitions remain untouched. With this configuration NVIDIA is pitching the GTX 1070 as a full generational update to the GTX 970, and with any luck, the GTX 1070 will be as well accepted as its extremely successful predecessor.

All told then, GTX 1070 provides 1920 CUDA cores split up over 15 SMs. Those 15 SMs are in turn running at a base clockspeed of 1506MHz and a boost clock of 1683MHz. This is slightly lower than GTX 1080, but as we’ll see in our full benchmark section, the official clockspeeds have very little impact; it’s the disabled GPC that really makes the difference. By the numbers, relative to the GTX 970 the GTX 1070 offers 65% more shading, texturing, and geometry throughput, and 63% more ROP throughput. The latter comes courtesy of both the higher clockspeeds and the fact that GTX 1070 ships with all 64 ROPs enabled, versus 56 of 64 on GTX 970.
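The two GTX 1070 ratios can be sketched the same way: throughput scales with the number of units times the clock. The GTX 970 core count (1664) and boost clock (1178MHz) are assumptions taken from its reference specifications; only its 56 enabled ROPs are stated in the text. The dictionary layout is just an illustration.

```python
# Units multiplied by boost clock serve as a relative throughput measure.
gtx_1070 = {"cores": 1920, "rops": 64, "boost_mhz": 1683}  # from the text
gtx_970 = {"cores": 1664, "rops": 56, "boost_mhz": 1178}   # cores/clock assumed

def relative_uplift(new: dict, old: dict, unit: str) -> float:
    """Fractional gain in (unit count x boost clock) from old to new."""
    new_rate = new[unit] * new["boost_mhz"]
    old_rate = old[unit] * old["boost_mhz"]
    return new_rate / old_rate - 1

shading_uplift = relative_uplift(gtx_1070, gtx_970, "cores")
rop_uplift_1070 = relative_uplift(gtx_1070, gtx_970, "rops")

print(f"Shading/texturing/geometry: +{shading_uplift * 100:.0f}%")
print(f"ROP throughput:             +{rop_uplift_1070 * 100:.0f}%")
```

The ROP gain nearly matches the shading gain here because GTX 1070 has 8 more enabled ROPs than GTX 970 in addition to the higher clock.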

As for memory, GTX 1070 doesn’t get GDDR5X. Instead the card gets 8GB of GDDR5 running at 8Gbps. This delivers a total memory bandwidth of 256GB/sec, and again unlike GTX 970, there is nothing going on with partitions here, so all of that memory and all of that bandwidth is operating in one contiguous partition, giving the GTX 1070 an effective memory bandwidth increase of 31%. GTX 1070 is the first NVIDIA card to ship with 8Gbps GDDR5, a memory speed I once didn’t think possible. NVIDIA and the memory partners are pushing GDDR5 to the limit by doing this, but at this point in time this is the most economical way to boost memory bandwidth without resorting to more exotic and expensive solutions like GDDR5X.

GTX 1070 is rated for a 150W TDP; this is a smaller, 5W increase over its predecessor. Despite the official TDP, it should be noted that NVIDIA is not pitching this card as their 150W champion for systems with a single 6-pin PCIe power cable, and it will require a more powerful 8-pin cable. For systems that need a true sub-150W card, this is where the GTX 1060 will step in. Otherwise NVIDIA is making a very interesting power play here: what is now the second most powerful video card on the market does so on just 150W.

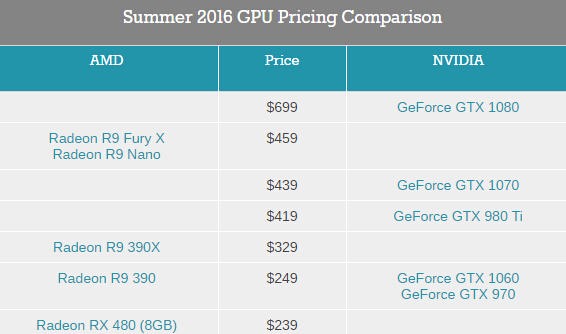

Cards, Pricing, & Availability

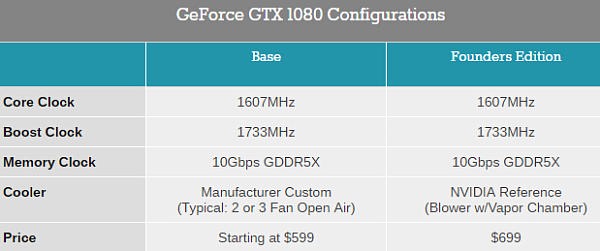

For the GTX 1000 series, NVIDIA has undertaken a significant change in how they handle reference boards and how those boards are priced. What were once reference boards are now being released as the Founders Edition boards. These boards are largely similar to NVIDIA’s last-generation reference boards, built using a standard PCB and NVIDIA’s high-end blower cooler, along with some additional cooling upgrades. The Founders Edition cards will, in turn, not be sold at NVIDIA’s general MSRP for each family, but rather they will be sold as premium cards for around $80-$100 more.

As a result we have two prices to talk about. For the GTX 1080, the family MSRP is $599. At the base level this is a slight price increase over the GTX 980, which launched at $549. As the Founders Edition cards are not being sold at this price, it is instead being filled by semi and fully custom cards from NVIDIA’s partners. These custom cards offer a mix of designs, but at the cheapest level (those cards closest to the MSRP) we’re predominantly looking at dual fan open air cooled cards. The rest of the lineup is filled by more advanced cards (including some closed loop liquid coolers) with factory overclocks and other features that are sold at a premium price. The GTX 1080 Founders Edition card, for its part, fits in to this picture at $699, a $100 premium.

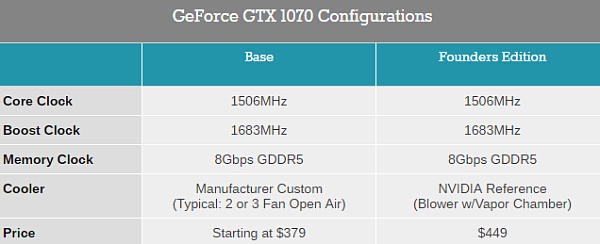

The story then is much the same for the GTX 1070. Its family MSRP is $379, while its Founders Edition counterpart is being sold for $449. At $379 for the family MSRP, this is a $50 price increase over the GTX 970, and I am curious over the long run whether this will significantly impact sales. One of the factors that made GTX 970 such a well-received card was its price, and this takes away from that by a bit. Otherwise, as with the GTX 1080, the partners’ custom cards for the GTX 1070 run the gamut from simple dual fan cards at the cheapest prices, up to premium, factory overclocked cards at the highest prices.

Unfortunately for everyone involved, the plan for pricing and reality haven’t quite agreed with each other. Even now, 2 months after the launch of the GTX 1080, card supplies are slim. There is effectively a shortage of GTX 1080 cards, as while NVIDIA insists they are continuing to ship out a good supply, those cards appear to be getting plucked off of virtual and physical shelves almost as quickly. As of the time this paragraph was written, Newegg only has a single GTX 1080 in stock, a Founders Edition card at $699.

For the last several generations it has been pretty common for the first batch or two of high-end cards to sell out; however, to be sold out for 2 months is a lot less common. Other than NVIDIA’s Titan series cards, which are a special case due to their prosumer market, I can’t immediately recall the last time an NVIDIA flagship card was this hard to get this late after a launch. For NVIDIA and its partners there are worse problems in the world (it’s better to have too few cards than too many cards that you can’t sell), but it certainly puts a damper on things for both the partners and for customers.

Meanwhile the GTX 1070 situation is noticeably better, though still not great. About half of the models that Newegg carries are in stock at any given time. So potential GTX 1070 owners have more options, though if they’re after a specific card they may find themselves waiting.

But the real problem with this shortage is that it has removed any incentive to keep prices close to NVIDIA’s MSRP. GTX 1070 prices start at $429 instead of $379, while GTX 1080 prices start at $649 (and if you actually want a card in stock, that’ll be $699). These are prices that are closer to last generation’s GTX 980 Ti/980 prices than they are to 980/970 prices, and it means that the actual GTX 1000 series price premium is much higher as it stands, at $100+ compared to the last generation. Given that these cards keep selling out, clearly there are enough buyers willing to pay these prices (it’s the free market in action), but it means NVIDIA’s MSRPs are for the moment an imaginary number. At this point all that we can do is hope that once the shortage breaks, there will be more intensive competition between the partners and retailers, and prices will fall down to MSRP.

As for the larger competitive landscape, as we’re looking at high-end cards at the start of a new generation, there really isn’t any competition to speak of. The GTX 1000 series sets a new bar for performance, and while last generation cards are being priced to clear out inventories, they aren’t performance competitive with the new cards. Meanwhile stalwart competitor AMD has opted to go after the mainstream market first rather than starting at the high-end. This means that the GTX 1080 and GTX 1070 will not have any competition for at least the next few months, leaving NVIDIA solely in the driver’s seat at the high-end, and in sole possession of the GPU performance crown.

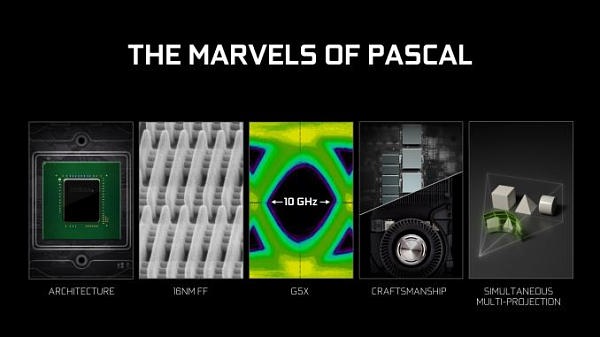

Pascal’s Architecture: What Follows Maxwell

With the launch of a new generation of GPUs we’ll start things off where we always do: the architecture.

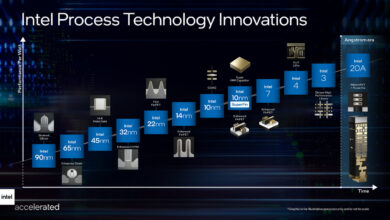

Discrete GPUs occupy an interesting space when it comes to the relationship between architecture and manufacturing processes. Whereas CPUs have architecture and manufacturing process decoupled, leading to Intel’s aptly named (former) tick-tock design methodology, GPUs have aligned architectures with manufacturing processes, with a new architecture premiering alongside a new process. Or rather, GPUs traditionally did. Maxwell threw a necessary spanner into all of this, and in its own way Pascal follows this break from tradition.

As the follow-up to their Kepler architecture, with Maxwell NVIDIA introduced a significantly altered architecture, one that broke a lot of assumptions Kepler earlier made and in the process vaulted NVIDIA far forward on energy efficiency. What made Maxwell especially important from a development perspective is that it came not on a new manufacturing process, but rather on the same 28nm process used for Kepler two years earlier, and this is something NVIDIA had never done before. With the 20nm planar process proving unsuitable for GPUs and only barely suitable for SoCs (the leakage from planar transistors this small was just too high), NVIDIA had to go forward with 28nm for another two years. It would come down to their architecture team to make the best of the situation and come up with a way to bring a generational increase in performance without the traditional process node shrink.

Now in 2016 we finally have new manufacturing nodes with the 14nm/16nm FinFET processes, giving GPU manufacturers a long-awaited (and much needed) opportunity to bring down power consumption and reduce chip size through improved manufacturing technology. The fact that it has taken an extra two years to get here, and what NVIDIA did in the interim with Maxwell, has opened up a lot of questions about what would follow for NVIDIA. The GPU development process is not so binary or straightforward that NVIDIA designed Maxwell solely because they were going to be stuck on the 28nm process (NVIDIA would have done Maxwell either way), but it certainly was good timing to have such a major architectural update fall when it did.

So how does NVIDIA follow up on Maxwell then? The answer comes in Pascal, NVIDIA’s first architecture for the FinFET generation. Designed to be built on TSMC’s 16nm process, Pascal is the latest and the greatest, and like every architecture before it is intended to further push the envelope on GPU performance, and ultimately push the envelope on the true bottleneck for GPU performance, energy efficiency.

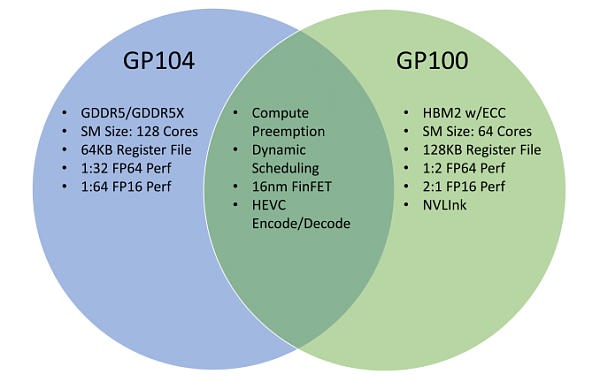

HPC vs. Consumer: Divergence

Pascal is an architecture that I’m not sure has any real parallel on a historical basis. And a big part of that is because to different groups within NVIDIA, Pascal means different things and brings different things, despite the shared architecture. On the one side is the consumer market, which is looking for a faster still successor to what Maxwell delivered in 2014 and 2015. Meanwhile on the high performance compute side, Pascal is the long-awaited update to the Kepler architecture (Maxwell never had an HPC part), combining the lessons of Maxwell with the specific needs of the HPC market.

The result is that there’s an interesting divergence going on between the HPC side and its GP100 GPU, and the consumer side and the GP104 GPU underlying GTX 1080. Even as far back as Fermi there was a distinct line separating HPC-class GPUs (GF100) from consumer/general compute GPUs (GF104), but with Pascal this divergence is wider than ever before. Ultimately the HPC market and GP100 is beyond the scope of this article and I’ll pick it up in detail another time, but because NVIDIA announced GP100 before GP104, it does require a bit of addressing to help sort out what’s going on and what NVIDIA’s design goals were with GP104.

Pascal as an overarching architecture contains a number of new features; however, not all of those features are present in all SKUs. If you were to draw a Venn diagram of Pascal, what you would find is that the largest collection of features is found in GP100, whereas GP104, like the Maxwell architecture before it, is stripped down for speed and efficiency. As a result while GP100 has some notable feature/design elements for HPC (things such as faster FP64 & FP16 performance, ECC, and significantly greater amounts of shared memory and register file capacity per CUDA core), these elements aren’t present in GP104 (and presumably, future Pascal consumer-focused GPUs).

Ultimately what we’re seeing in this divergence is a greater level of customization between NVIDIA’s HPC and consumer markets. The HPC side of NVIDIA is finally growing up, and it’s growing fast. The long term plan at NVIDIA has been to push GPU technology beyond consumer and professional graphics, and while it has taken years longer than NVIDIA originally wanted, thanks in big part to success in the deep learning market, NVIDIA is finally achieving their goals.

This means that although GP100 is a fully graphics capable GPU, it doesn’t necessarily have to be put into video cards to make sense for NVIDIA to manufacture, and as a result NVIDIA can make it even more compute focused than prior-generation parts like GK110 and GF110. And that in turn means that although this divergence is driven by the needs of the HPC market (what features need to be added to make a GPU more suitable for HPC use cases), from the perspective of the consumer market there is a tendency to perceive that consumer parts are falling behind. This is especially so given how differently GP100 and GP104’s SMs are partitioned.

This is a subject I’ll revisit in much greater detail in the future when we focus on GP100. But for now, especially for the dozen of you who’ve emailed over the past month asking about why the two are so different, the short answer is that the market needs for HPC are different from graphics, and the difference in how GP100 and GP104 are partitioned reflects this. GP100 and GP104 are both unequivocally Pascal, but GP100 gets smaller SM partitions in order to increase the number of registers and the amount of shared memory available per CUDA core. Shared memory and register contention on graphics workloads isn’t nearly as great as with HPC tasks, since pixel shader threads are relatively short and independent from each other, which means that while the increased ratios benefit HPC workloads, for graphics the gains would be minimal. And the costs to power and die space would, in turn, far outweigh any benefits.

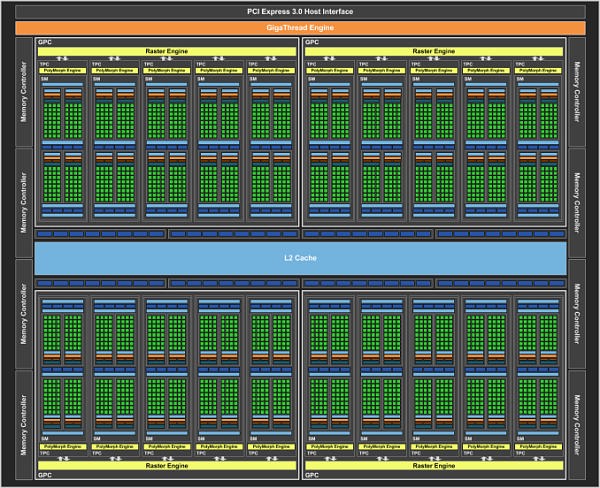

GP104: The Heart of GTX 1080

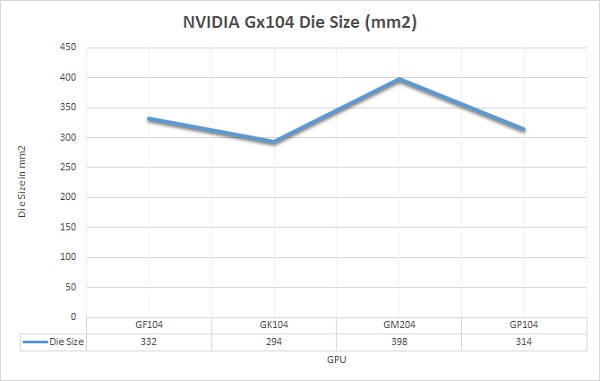

At the heart of the GTX 1080 is the first of the consumer-focused Pascal GPUs, GP104. Though no two GPU generations are ever quite alike, GP104 follows a number of design cues established with the past couple of 104 GPUs. Overall 104 GPUs have struck a balance between size and performance, allowing NVIDIA to get a suitably high yielding GPU out at the start of a generation, and to be followed up with larger GPUs later on as yields improve. With the exception of the GTX 780, 104 GPUs have been the backbone of NVIDIA’s GTX 70 and 80 parts, and that is once again the case for the Pascal generation.

In terms of die size, GP104 comes in at 314mm². This is right in NVIDIA’s traditional sweet spot for these designs, slotting in between the 294mm² GK104 and the 332mm² GF104. In terms of total transistors we’re looking at 7.2B transistors, up from 3.5B on GK104 and the 5.2B of the more unusual GM204. The significant increase in density comes from the use of TSMC’s 16nm FinFET process, which compared to 28nm amounts to a full node shrink, something that has been harder and harder to come by as the years have progressed.
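To put a number on the density increase, the transistor counts and die sizes quoted above can be divided out. This is a back-of-the-envelope sketch using only the rounded GP104 and GK104 figures from the text, so the results are approximate; the function name is illustrative.

```python
def density_mtr_per_mm2(transistors_billions: float, die_mm2: float) -> float:
    """Average transistor density in millions of transistors per mm^2."""
    return transistors_billions * 1000 / die_mm2

gp104_density = density_mtr_per_mm2(7.2, 314)  # 16nm FinFET
gk104_density = density_mtr_per_mm2(3.5, 294)  # 28nm planar

density_gain = gp104_density / gk104_density

print(f"GP104: {gp104_density:.1f} MTr/mm^2")
print(f"GK104: {gk104_density:.1f} MTr/mm^2")
print(f"Density gain: {density_gain:.2f}x")
```

On these rounded figures GP104 packs a little under twice as many transistors into each square millimeter as GK104 did.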

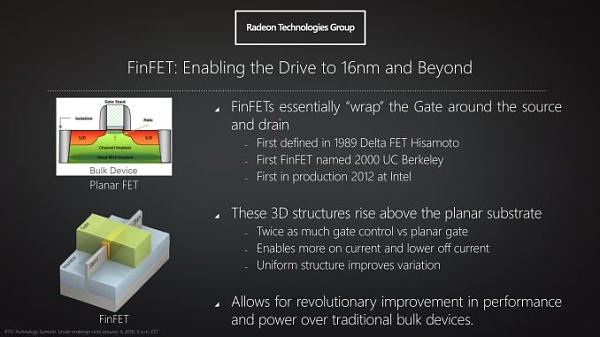

Though the density improvement offered by TSMC’s 16nm process is of great importance to GP104’s overall performance, for once density takes a back seat to the properties of the process itself. I am of course speaking about the FinFET transistors, which are the headlining feature of TSMC’s process.

We’ve covered FinFET technology in depth before, so I won’t completely rehash it here. But in brief, FinFETs are an important development for chip fabrication as processes have gone below 28nm. As traditional, planar transistors have shrunk in feature size (and ultimately, in the number of atoms they’re comprised of), electrical leakage has increased. With fewer atoms in a transistor, there are equally fewer atoms to control the flow of electrons.

FinFET in turn is a solution to this problem, essentially allowing fabs to turn back the clock on electrical leakage. By building transistors as three-dimensional objects with height as opposed to two-dimensional objects, giving FinFET transistors their characteristic fins in the process, FinFET technology greatly reduces the amount of energy a transistor leaks. In practice what this means is that FinFET technology not only reduces the total amount of energy wasted from leakage, but it also allows transistors to be operated at a much lower voltage, something we’ll see in depth with our analysis of GTX 1080.

FinFETs, or rather the lack thereof, are a big part of why we never saw GPUs built on TSMC’s 20nm process. It was TSMC’s initial belief that they could contain leakage well enough using traditional High-K Metal Gate (HKMG) technology on 20nm, a bet they ultimately lost. At 20nm, planar transistors were just too leaky to use for many applications, which is why ultimately we only saw SoCs on 20nm (and even then they were suboptimal). FinFETs, as it turns out, are absolutely necessary to get good performance out of transistors built on processes below 28nm.

And while it took TSMC some time to get there, now that they have the capability NVIDIA can reap the benefits. Not only can NVIDIA finally build a relatively massive chip like a GPU on a sub-28nm process, but thanks to the various beneficial properties of FinFETs, it allows them to take their designs in a different direction than what they could do on 28nm.

GP104’s Architecture

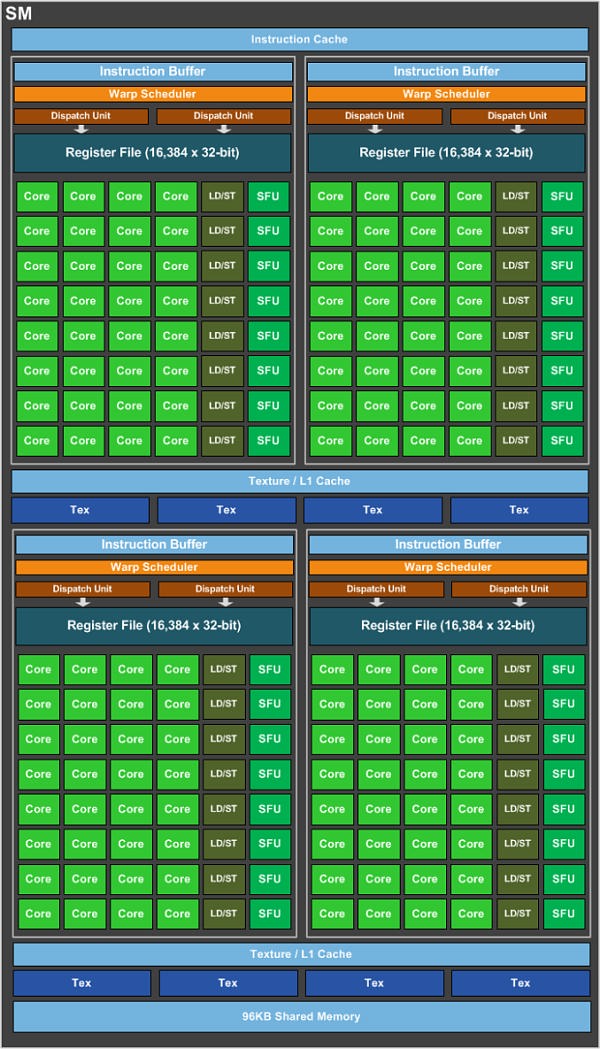

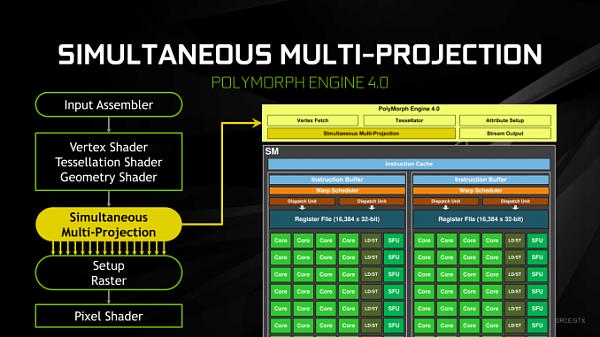

Looking at an architecture diagram for GP104, Pascal ends up looking a lot like Maxwell, and this is not by chance. After making more radical changes to their architecture with Maxwell, for Pascal NVIDIA is taking a bit of a breather. Which is not to say that Pascal is Maxwell on 16nm (this is very much a major feature update), but when it comes to discussing the core SM architecture itself, there is significant common ground with Maxwell.

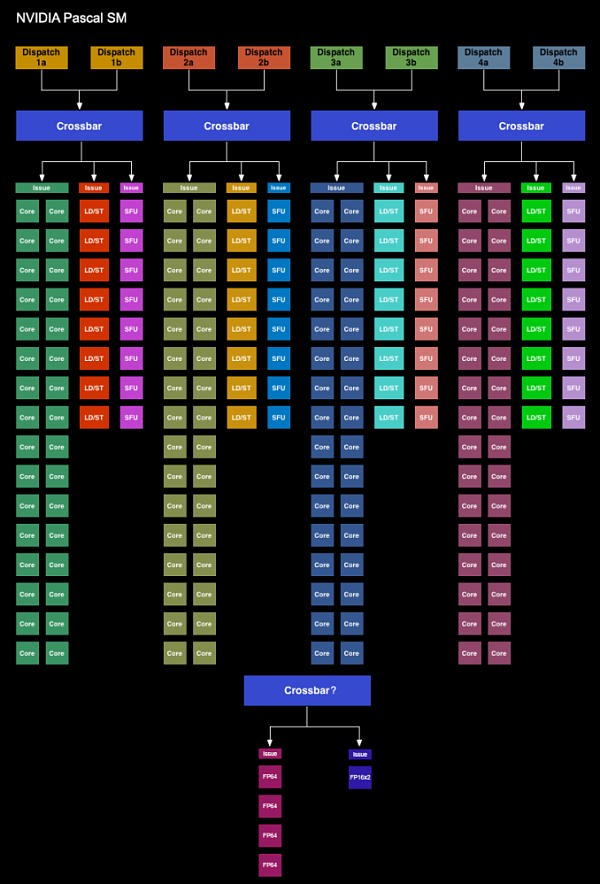

We’ll start with the GP104 SM. Simply named the SM for this generation (NVIDIA has ditched the generational suffix due to the potential for confusion with the used-elsewhere SMP), the GP104 SM is very similar to the Maxwell SM. We’re still looking at a single SM partially sub-divided into four pieces, each containing a single warp scheduler that’s responsible for feeding 32 CUDA cores, 8 load/store units, and 8 Special Function Units, backed by a 64KB register file. There are two dispatch ports per warp scheduler, so when an instruction stream allows it, a warp scheduler can extract a limited amount of ILP from that stream by issuing a second instruction to an unused resource.

Meanwhile shared between every pair of sub-partitions are 4 texture units and the combined L1/texture cache, again unchanged from Maxwell. Finally, we have the resources shared throughout the whole SM: the 96KB shared memory, the instruction cache, and not pictured on NVIDIA’s diagrams, the 4 FP64 CUDA cores and 1 FP16x2 CUDA core.

Overall then at the diagram level the GP104 SM looks almost identical to the Maxwell SM, but with one exception: the PolyMorph Engine. Although the distinction is largely arbitrary for GP104, the PolyMorph Engine has been moved up a level; it’s no longer part of the SM, but rather part of the newly re-introduced TPC, which itself sits between the GPC and the SM.

The TPC exists because although GP104 still has a 1:1 ratio between PolyMorph Engines and SMs, the Pascal architecture itself allows for different SM configurations, which is in turn used on GP100 to allow it to have multiple smaller SMs of 64 CUDA Cores. For GP100 the TPC allows for multiple SMs to share a PolyMorph Engine, but for GP104 there’s no sharing involved. To that end the TPC as an organizational unit technically exists across all Pascal parts, but it has no real significance for GP104. In fact it doesn’t even have a real name; NVIDIA reused the acronym from earlier DX10 architectures, where the TPC was the name assigned to the Texture Processor Cluster.

Looking at the bigger picture of the complete GP104 GPU, the similarities continue between GP104 and GM204. GP104’s SMs are clustered five-a-piece inside of the GPC, with each cluster sharing a single Raster Engine. Overall there are 4 such GPCs, giving us 20 SMs altogether. Compared to GM204 then, we’re looking at the same number of GPCs, with each GPC having gained 1 SM.
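The hierarchy described above (GPC, then SM, then four sub-partitions of 32 CUDA cores) can be written down as a small sketch to show where the headline core counts come from. The constant and function names are hypothetical; the GM204 line uses the 4 SMs per GPC implied by the text's remark that each GPC gained 1 SM.

```python
CORES_PER_PARTITION = 32  # CUDA cores fed by each warp scheduler
PARTITIONS_PER_SM = 4     # sub-partitions per SM

def cuda_cores(active_gpcs: int, sms_per_gpc: int) -> int:
    """Total CUDA cores for a GPU built from identical GPCs."""
    cores_per_sm = PARTITIONS_PER_SM * CORES_PER_PARTITION  # 128
    return active_gpcs * sms_per_gpc * cores_per_sm

gtx_1080_cores = cuda_cores(active_gpcs=4, sms_per_gpc=5)  # full GP104
gtx_1070_cores = cuda_cores(active_gpcs=3, sms_per_gpc=5)  # one GPC disabled
gm204_cores = cuda_cores(active_gpcs=4, sms_per_gpc=4)     # full GM204

print(gtx_1080_cores, gtx_1070_cores, gm204_cores)
```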

Things get more interesting when we look at the back end of the rendering/execution pipeline, which is comprised of the L2 cache, ROPs, and memory controllers. The ROP/L2 count has not changed relative to GM204 (we still have 64 ROPs paired up with a total of 2MB of L2 cache); however, the memory controller count has. And with it the logical configuration of the ROP/L2 blocks has changed as well.

Whereas GM204 had 4 64bit GDDR5 memory controllers, each connected to 2 or 4 memory chips, GP104 breaks that down further to 8 32bit GDDR5X memory controllers, each of which is connected to 1 memory chip on GTX 1080. I’ll go into greater detail on GDDR5X a bit later, but the significance of this backend organizational change has to do with the introduction of GDDR5X. Because GDDR5X reads and writes data in 64B amounts (versus 32B amounts on GDDR5), NVIDIA has reorganized the memory controllers to ensure that each memory controller still operates on the same amount of data. With GDDR5 they teamed up two GDDR5 channels to get 64B operations, whereas with GDDR5X this can be accomplished with a single memory channel.
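The 64B versus 32B access sizes follow from channel width times burst length. The sketch below assumes a burst length of 8 for GDDR5 and 16 for GDDR5X on a 32-bit channel; those burst lengths are not stated in the text and are taken here as assumptions about the memory standards. It then shows why four 64-bit controllers and eight 32-bit controllers both end up operating on 64B at a time.

```python
def bytes_per_access(channel_width_bits: int, burst_length: int) -> int:
    """Data moved by one burst on a single memory channel, in bytes."""
    return channel_width_bits * burst_length // 8

# Assumed burst lengths: 8 for GDDR5, 16 for GDDR5X.
gddr5_access = bytes_per_access(32, 8)
gddr5x_access = bytes_per_access(32, 16)

# GM204: 4 controllers, each pairing two 32-bit GDDR5 channels.
gm204_per_controller = 2 * gddr5_access
gm204_bus_bits = 4 * 64
# GP104: 8 controllers, each driving one 32-bit GDDR5X channel.
gp104_per_controller = 1 * gddr5x_access
gp104_bus_bits = 8 * 32

print(f"GDDR5 channel access:  {gddr5_access}B")
print(f"GDDR5X channel access: {gddr5x_access}B")
print(f"Per controller: GM204 {gm204_per_controller}B, GP104 {gp104_per_controller}B")
print(f"Total bus width: GM204 {gm204_bus_bits}-bit, GP104 {gp104_bus_bits}-bit")
```

Under these assumptions the total bus width stays at 256 bits and each controller still handles 64B per operation; only the number of controllers changes.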

This in turn is where the ROP reorganization comes from. As there’s a 1:1 relationship between ROP partitions and memory controllers, the 64 ROPs are now broken up into 8 partitions for GP104, as opposed to 4 partitions on GM204. There are some performance tradeoffs that come from having more ROP partitions, but to the best of my knowledge these should not be significant.

Meanwhile the new GDDR5X memory controllers are also backwards compatible with traditional GDDR5, which in turn is used to drive the GTX 1070 with its 8Gbps GDDR5. The difference in operation between GDDR5 and GDDR5X does make the ROP situation a bit trickier overall for NVIDIA’s architects (now they need to be able to handle two different memory access patterns), though for NVIDIA this isn’t a wholly new problem. Previous generation architectures have supported both GDDR5 and DDR3, the two of which have their own differences in memory access patterns.

In a by-the-numbers comparison then, Pascal does not bring any notable changes in throughput relative to Maxwell. CUDA cores, texture units, PolyMorph Engines, Raster Engines, and ROPs all have identical theoretical throughput-per-clock as compared to Maxwell. So on a clock-for-clock, unit-for-unit basis, Pascal is not any faster on paper. And while NVIDIA does not disclose the size/speed of most of their internal datapaths, so far I haven’t seen anything to suggest that these have radically changed. This continuity means that outside of its new features, GP104 behaves a lot like GM204. Though it should be noted that real world efficiency isn’t quite as cut and dry, as various factors such as the increased SM count and changes in memory technology can greatly influence this.

FP16 Throughput on GP104: Good for Compatibility (and Not Much Else)

Speaking of architectural details, I know that the question of FP16 (half precision) compute performance has been of significant interest. FP16 performance has been a focus area for NVIDIA for both their server-side and client-side deep learning efforts, leading to the company turning FP16 performance into a feature in and of itself.

Starting with the Tegra X1, and then carried forward for Pascal, NVIDIA added native FP16 compute support to their architectures. Prior to these parts, any use of FP16 data would require that it be promoted to FP32 for both computational and storage purposes, which meant that using FP16 did not offer any meaningful improvement in performance or storage needs. In practice this meant that if a developer only needed the precision offered by FP16 compute (and deep learning is quickly becoming the textbook example here), at an architectural level power was being wasted computing that extra precision.

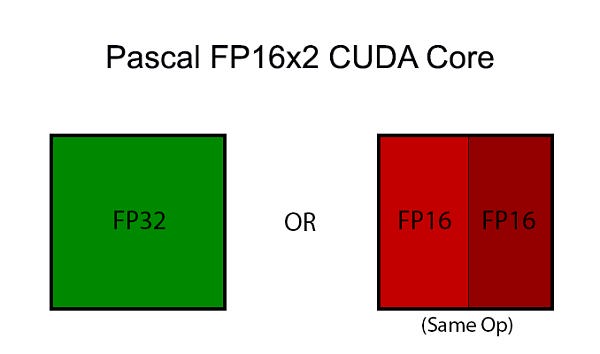

Pascal, in turn, brings with it native support for FP16 for both storage and compute. On the storage side, Pascal supports FP16 datatypes, which relative to the previous use of FP32 means that FP16 values take up less space at every level of the memory hierarchy (registers, cache, and DRAM). On the compute side, Pascal introduces a new type of FP32 CUDA core that supports a form of FP16 execution where two FP16 operations are run through the CUDA core at once (vec2). This core, which for clarity I’m going to call an FP16x2 core, allows the GPU to process 1 FP32 or 2 FP16 operations per clock cycle, essentially doubling FP16 performance relative to an identically configured Maxwell or Kepler GPU.

Now there are several special cases here due to the use of vec2 (packing together operations is not the same as having native FP16 CUDA cores), but in a nutshell NVIDIA can pack together FP16 operations as long as they’re the same operation, e.g. both FP16s are undergoing addition, multiplication, etc. Fused multiply-add (FMA/MADD) is also a supported operation here, which is important for how frequently it is used and is necessary to extract the maximum throughput out of the CUDA cores.
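To make the vec2 idea concrete, here is a small software model of packing two FP16 values into one 32-bit word and applying the same multiply-add to both lanes. It is only an illustration of the packing constraint, not how the hardware is implemented: the helper names `pack_half2`, `unpack_half2`, and `hfma2` are hypothetical, and the arithmetic is done in Python floats and rounded to FP16 when the result is repacked.

```python
import struct

def pack_half2(lo: float, hi: float) -> int:
    """Pack two FP16 values into a single 32-bit word (low lane first)."""
    return int.from_bytes(struct.pack("<2e", lo, hi), "little")

def unpack_half2(word: int):
    """Split a 32-bit word back into its two FP16 lanes."""
    return struct.unpack("<2e", word.to_bytes(4, "little"))

def hfma2(a: int, b: int, c: int) -> int:
    """Apply the SAME operation (a * b + c) to both lanes of packed operands."""
    a0, a1 = unpack_half2(a)
    b0, b1 = unpack_half2(b)
    c0, c1 = unpack_half2(c)
    # Both lanes must perform a multiply-add; mixing, say, an add in one
    # lane with a multiply in the other is what vec2 packing cannot do.
    return pack_half2(a0 * b0 + c0, a1 * b1 + c1)

a = pack_half2(1.5, -3.0)
b = pack_half2(2.0, 0.5)
c = pack_half2(0.25, 1.0)

result = unpack_half2(hfma2(a, b, c))
print(result)  # (3.25, -0.5)
```

One packed instruction yields two FP16 results, which is where the doubled FP16 rate comes from, but only when adjacent operations can be paired up like this.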

Low precision operations are in turn seen by NVIDIA as one of the keys into further growing their increasingly important datacenter market, as deep learning and certain other tasks are themselves rapidly growing fields. Pascal isn’t just faster than Maxwell overall, but when it comes to FP16 operations on the FP16x2 core, Pascal is a lot faster, with theoretical throughput over similar Maxwell GPUs increasing by over three-fold thanks to the combination of overall speed improvements and double speed FP16 execution.

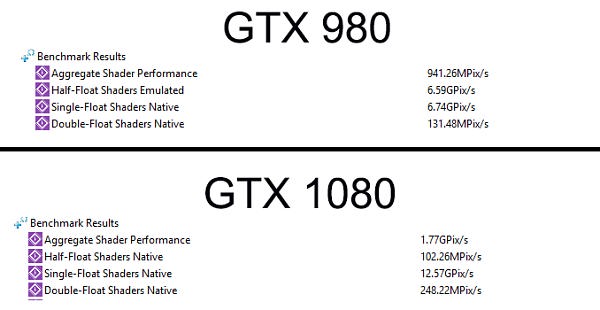

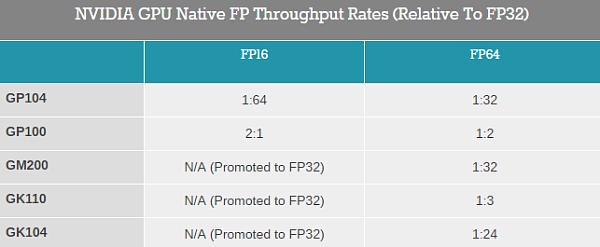

GeForce GTX 1080, on the other hand, is not faster at FP16. In fact it’s downright slow. For their consumer cards, NVIDIA has severely limited FP16 CUDA performance. GTX 1080’s FP16 instruction rate is 1/128th its FP32 instruction rate, or after you factor in vec2 packing, the resulting theoretical performance (in FLOPs) is 1/64th the FP32 rate, or about 138 GFLOPs.
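The FP16 figure falls out of the same peak-rate arithmetic used for FP32. This is a minimal sketch, assuming peak FP32 throughput is 2 FLOPs per CUDA core per clock at the 1733MHz boost clock; the variable names are illustrative.

```python
CUDA_CORES = 2560
BOOST_MHZ = 1733

# Peak FP32 rate in GFLOPs: one FMA (2 FLOPs) per core per clock.
fp32_gflops = CUDA_CORES * 2 * BOOST_MHZ / 1000

fp16_instruction_ratio = 1 / 128  # FP16 instruction rate relative to FP32
vec2_ops_per_instruction = 2      # each FP16x2 instruction handles two values

fp16_flops_ratio = fp16_instruction_ratio * vec2_ops_per_instruction
fp16_gflops = fp32_gflops * fp16_flops_ratio

print(f"FP32: {fp32_gflops:.0f} GFLOPs")
print(f"FP16: 1/{1 / fp16_flops_ratio:.0f} of FP32, {fp16_gflops:.1f} GFLOPs")
```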

After initially testing FP16 performance with SiSoft Sandra, one of a handful of programs with an FP16 benchmark built against CUDA 7.5, I reached out to NVIDIA to confirm whether my results were correct, and if they had any further explanation for what I was seeing. NVIDIA was able to confirm my findings, and furthermore that the FP16 instruction rate and throughput rates were different, confirming in a roundabout manner that GTX 1080 was using vec2 packing for FP16.

As it turns out, when it comes to FP16 NVIDIA has made another significant divergence between the HPC-focused GP100, and the consumer-focused GP104. On GP100, these FP16x2 cores are used throughout the GPU as both the GPU’s primary FP32 core and primary FP16 core. However on GP104, NVIDIA has retained the old FP32 cores. The FP32 core count as we know it is for these pure FP32 cores. What isn’t seen in NVIDIA’s published core counts is that the company has built in the FP16x2 cores separately.

To get right to the point then, each SM on GP104 only contains a single FP16x2 core. This core is in turn only used for executing native FP16 code (i.e. CUDA code). It’s not used for FP32, and it’s not used for FP16 on APIs that can’t access the FP16x2 cores (and as such promote FP16 ops to FP32). The lack of a significant number of FP16x2 cores is why GP104’s FP16 CUDA performance is so low as listed above. There is only 1 FP16x2 core for every 128 FP32 cores.

Limiting the performance of compute-centric features in consumer parts is nothing new for NVIDIA. FP64 has been treated as a Tesla feature since the beginning, and consumer parts have either shipped with a very small number of FP64 CUDA cores for binary compatibility purposes, or when a GeForce card uses an HPC-class GPU, FP64 performance is artificially restricted. This allows NVIDIA to include a feature for software development purposes while enforcing strict market segmentation between the GeForce and Tesla products. However in the case of FP64, performance has never been slower than 1/32, whereas with FP16 we’re looking at a much slower 1/128 instruction rate. Either way, the end result is that like GP104’s FP64 support, GP104’s FP16 support is almost exclusively for CUDA development compatibility and debugging purposes, not for performant consumer use.

As for why NVIDIA would want to make FP16 performance so slow on Pascal GeForce parts, I strongly suspect that the Maxwell 2 based GTX Titan X sold too well with compute users over the past 12 months, and that this is NVIDIA’s reaction to that event. GTX Titan X’s FP16 and FP32 performance was (per-clock) identical to that of its Tesla equivalent, the Tesla M40, and furthermore both cards shipped with 12GB of VRAM. This meant that other than Tesla-specific features such as drivers and support, there was little separating the two cards.

The Titan series has always straddled the line between professional compute and consumer graphics users, however if it veers too far into the former then it puts Tesla sales at risk. Case in point: at this year’s NVIDIA GPU Technology Conference, I was approached twice by product vendors who were looking for more Titan X cards for their compute products, as at that time the Titan X was in short supply. Suffice it to say, Titan X has been very popular with the compute crowd.

In any case, limiting the FP16 instruction rate on GeForce products is an easy way to ensure that these products don’t compete with the higher margin Tesla business. NVIDIA has only announced one Tesla so far (the high-end P100), but even that sold out almost immediately. For now I suspect that NVIDIA wants to ensure that P100 and M40 sales are not impacted by the new GeForce cards.

Overall I’m not surprised that NVIDIA limited the FP16 performance of the GTX 1080 (albeit by a new record), as they clearly consider faster FP16 performance a feature that can be monetized under Tesla. However I have to admit that I am surprised that NVIDIA limited it in hardware on GP104 in this fashion, similar to how they limit FP64 performance, rather than using FP16x2 cores throughout the GPU and using a software cap. The difference is that had NVIDIA implemented a complete fast FP16 path in GP104 and merely turned it off for GeForce, then they could have used GP104 for high performance (and high margin) FP16 Tesla cards. However by building GP104 from the get-go with a single FP16x2 unit per SM, they have closed the door on that option.

Where things may get especially interesting when it comes to FP16 performance is in smaller-still chips such as GP106. NVIDIA admittedly never used GM204 as a high performance compute part (it was used in the virtualization focused Tesla M6 and M60 cards), but NVIDIA did produce a small form factor compute and deep learning focused card with the GM206 based Tesla M4. I fully expect that NVIDIA will want a successor to this card, which will be hard to do if only GP100 has fast FP16 support. At the same time NVIDIA has still yet to disclose the dGPUs used with the DRIVE PX 2 module, where again fast FP16 support is useful for neural network inference. It may very well be that GP104’s low hardware FP16 performance is something that is not shared by the rest of the Pascal consumer GPU family.

Designing GP104: Running Up the Clocks

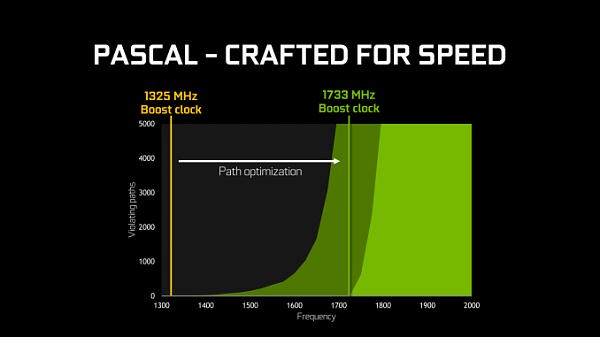

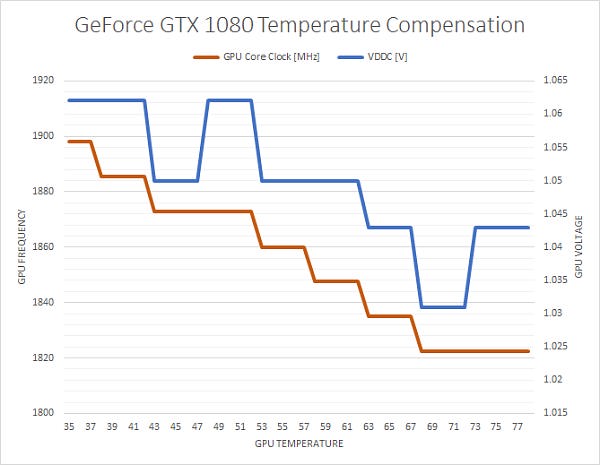

So if GP104’s per-unit throughput is identical to GM204, and the CUDA core count has only been increased from 2048 to 2560 (25%), then what makes GTX 1080 60-70% faster than GTX 980? The answer there is that instead of vastly increasing the number of functional units for GP104 or increasing per-unit throughput, NVIDIA has instead opted to significantly raise the GPU clockspeed. And this in turn goes back to the earlier discussion on TSMC’s 16nm FinFET process.

With every advancement in fab technology, chip designers have been able to increase their clockspeeds thanks to the basic physics at play. However because TSMC’s 16nm node adds FinFETs for the first time, it’s extra special. What’s happening here is a confluence of multiple factors, but at the most basic level the introduction of FinFETs means that the entire voltage/frequency curve gets shifted. The reduced leakage and overall “stronger” FinFET transistors can run at higher clockspeeds at lower voltages, allowing for higher overall clockspeeds at the same (or similar) power consumption. We see this effect to some degree with every node shift, but it’s especially potent when making the shift from planar to FinFET, as has been the case for the jump from 28nm to 16nm.

Given the already significant one-off benefits of such a large jump in the voltage/frequency curve, for Pascal NVIDIA has decided to fully embrace the idea and run up the clocks as much as is reasonably possible. At an architectural level this meant going through the design to identify bottlenecks in the critical paths (logic sections that couldn’t run at as high a frequency as NVIDIA would have liked) and reworking them to operate at higher frequencies. As GPUs have typically been (and still are) relatively low clocked, there hasn’t been as much of a need to optimize critical paths in this manner, but NVIDIA’s loftier clockspeed goals for Pascal changed things.

From an implementation point of view this isn’t the first time that NVIDIA has pushed for high clockspeeds, as most recently the 40nm Fermi architecture incorporated a double-pumped shader clock. However this is the first time NVIDIA has attempted something similar since they reined in their power consumption with Kepler (and later Maxwell). Having learned their lesson the hard way with Fermi, I’m told a lot more care went into matters with Pascal in order to avoid the power penalties NVIDIA paid with Fermi, exemplified by things such as only adding flip-flops where truly necessary.

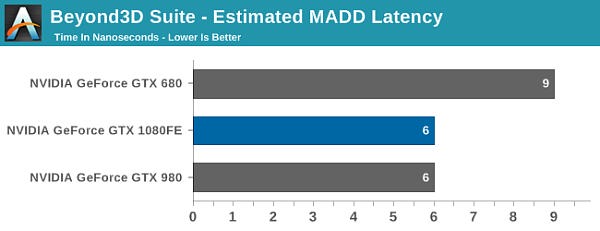

Meanwhile when it comes to the architectural impact of designing for high clockspeeds, the results seem minimal. While NVIDIA does not divulge full information on the pipeline of a CUDA core, all of the testing I’ve run indicates that the latency (in clock cycles) of the CUDA cores is identical to Maxwell, which goes hand in hand with earlier observations about throughput. So although optimizations were made to the architecture to improve clockspeeds, it doesn’t look like NVIDIA has made any more extreme optimizations (e.g. pipeline lengthening) that detectably reduce Pascal’s per-clock performance.

Finally, more broadly speaking, while this is essentially a one-time trick for NVIDIA, it’s an interesting route for them to go. By cranking up their clockspeeds in this fashion, they avoid any real scale-out issues, at least for the time being. Although graphics are the traditional embarrassingly parallel problem, even a graphical workload is subject to some degree of diminishing returns as GPUs scale farther out. A larger number of SMs is more difficult to fill, not every aspect of the rendering process is massively parallel (shadow maps being a good example), and ever-increasing pixel shader lengths compound the problem. Admittedly NVIDIA’s not seeing significant scale-out issues quite yet, but this is why GTX 980 isn’t quite twice as fast as GTX 960, for example.

Just increasing the clockspeed, comparatively speaking, means that the entire GPU gets proportionally faster without shifting the resource balance; the CUDA cores are 43% faster, the geometry frontends are 43% faster, the ROPs are 43% faster, etc. The only real limitation in this regard isn’t the GPU itself, but whether you can adequately feed it. And this is where GDDR5X comes into play.
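To put numbers on the width-versus-clock split, here is a small sketch. It assumes the published CUDA core counts and boost clocks for the two cards (2048 cores at 1216MHz for GTX 980, 2560 cores at 1733MHz for GTX 1080); the dictionary and function names are illustrative only, and the result is theoretical throughput, not measured game performance.

```python
# How much of GTX 1080's theoretical gain over GTX 980 comes from being
# wider versus being clocked higher. Specs are assumed published values.

GTX_980 = {"cuda_cores": 2048, "boost_mhz": 1216}
GTX_1080 = {"cuda_cores": 2560, "boost_mhz": 1733}


def scaling(old: dict, new: dict) -> tuple:
    width = new["cuda_cores"] / old["cuda_cores"]   # more functional units
    clock = new["boost_mhz"] / old["boost_mhz"]     # faster functional units
    return width, clock, width * clock              # combined ALU throughput


if __name__ == "__main__":
    width, clock, total = scaling(GTX_980, GTX_1080)
    print(f"width: +{(width - 1) * 100:.0f}%")
    print(f"clock: +{(clock - 1) * 100:.0f}%")
    print(f"total: +{(total - 1) * 100:.0f}%")
```

Anything that scales only with clockspeed (geometry frontends, ROPs) picks up the clock factor alone, while the CUDA cores pick up both factors.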

Feeding Pascal: GDDR5X

An ongoing problem for every generation of GPUs is the matter of memory bandwidth. As graphics is an embarrassingly parallel problem, it scales out with additional ALUs (and consequently Moore’s Law) relatively well. Each successive generation of GPUs is wider and higher clocked, consuming more data than ever before.

The problem for GPUs is that while their performance tracks Moore’s Law well, the same cannot be said for DRAM. To be sure, DRAM has gotten faster over the years as well, but it hasn’t improved at nearly the same pace as GPUs, and physical limitations ensure that this will continue to be the case. So with every generation, GPU vendors need to be craftier and craftier about how they get more memory bandwidth, and in turn how they use that memory bandwidth.

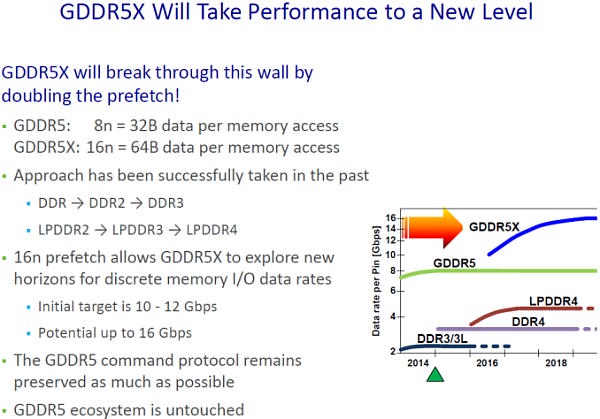

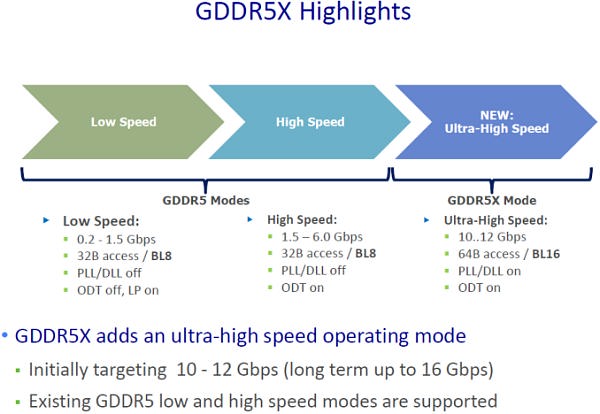

To help address this problem, Pascal brings to the table two new memory-centric features. The first of which is support for the newer GDDR5X memory standard, which looks to address the memory bandwidth problem from the supply side.

By this point GDDR5 has been with us for a surprisingly long period of time (AMD first implemented it on the Radeon HD 4870 in 2008), and it has been taken to higher clockspeeds than originally intended. Today’s GeForce GTX 1070 and Radeon RX 480 cards ship with 8Gbps GDDR5, a faster transfer rate than the originally envisioned limit of 7Gbps. That GPU manufacturers and DRAM makers have been able to push GDDR5 so high is a testament to their abilities, but at the same time the technology is clearly reaching its apex (at least for reasonable levels of power consumption).

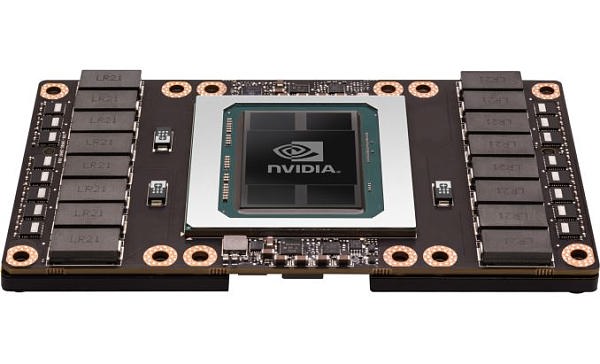

As a result there has been a great deal of interest in the memory technologies that would succeed GDDR5. At the high end, last year AMD became the first vendor to implement version 1 of High Bandwidth Memory, a technology that is a significant departure from traditional DRAM and uses an ultra-wide 4096-bit memory bus to provide enormous amounts of bandwidth. Not to be outdone, NVIDIA has adopted HBM2 for their HPC-centric GP100 GPU, using it to deliver 720GB/sec of bandwidth for Pascal P100.

While from a technical level HBM is truly fantastic next-generation technology (it uses cutting edge technology throughout, from TSV die-stacking to silicon interposers that connect the DRAM stacks to the processor), its downside is that all of this next-generation technology is still expensive to implement. Precise figures aren’t publicly available, but the silicon interposer is more expensive than a relatively simple PCB, and connecting DRAM dies through TSVs and stacking them is more complex than laying down BGA DRAM packages on a PCB. For NVIDIA, a more cost-effective solution was desired for GP104.

That solution came from Micron and the JEDEC in the form of GDDR5X. A sort of half-generation extension of traditional GDDR5, GDDR5X further increases the amount of memory bandwidth available from GDDR5 through a combination of a faster memory bus coupled with wider memory operations to read and write more data from DRAM per clock. And though it’s not without its own costs such as designing new memory controllers and boards that can accommodate the tighter requirements of the GDDR5X memory bus, GDDR5X offers a step in performance between the relatively cheap and slow GDDR5, and relatively fast and expensive HBM2.

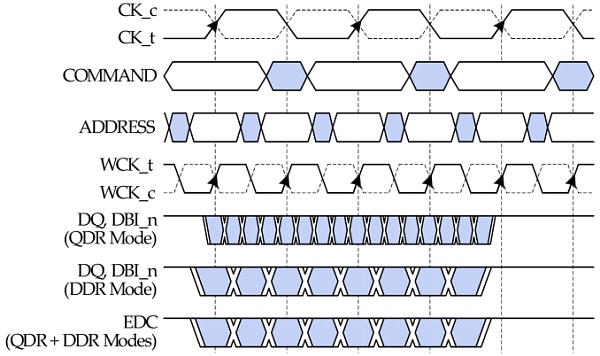

Relative to GDDR5, the significant breakthrough on GDDR5X is the implementation of Quad Data Rate (QDR) signaling on the memory bus. Whereas GDDR5’s memory bus would transfer data twice per write clock (WCK) via DDR, GDDR5X extends this to four transfers per clock. All other things held equal, this allows GDDR5X to transfer twice as much data per clock as GDDR5.
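The relationship is simple enough to sketch in a couple of lines. The 2.5GHz write clock used here is only an illustrative value chosen so that QDR works out to 10Gbps; it is not a figure quoted in this article.

```python
# Per-pin data rate as a function of write clock (WCK) and the number of
# transfers per clock. WCK_GHZ is an illustrative assumption.

def per_pin_gbps(wck_ghz: float, transfers_per_clock: int) -> float:
    return wck_ghz * transfers_per_clock


WCK_GHZ = 2.5
DDR_RATE = per_pin_gbps(WCK_GHZ, 2)  # GDDR5-style: two transfers per WCK
QDR_RATE = per_pin_gbps(WCK_GHZ, 4)  # GDDR5X-style: four transfers per WCK

if __name__ == "__main__":
    print(f"DDR at {WCK_GHZ}GHz WCK: {DDR_RATE}Gbps per pin")
    print(f"QDR at {WCK_GHZ}GHz WCK: {QDR_RATE}Gbps per pin")
```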

QDR itself is not a new innovation (Intel implemented a quad pumped bus 15 years ago for the Pentium 4 with AGTL+), but this is the first time it has been implemented in a common JEDEC memory standard. The history of PC memory standards is itself quite a tale, and I suspect that the fact we’re only seeing a form of QDR now is related to patents. But regardless, here we are.

Going hand-in-hand with the improved transfer rate of the GDDR5X memory bus, GDDR5X also once again increases the size of read/write operations, as the core clockspeed of GDDR5X chips is only a fraction of the bus speed. GDDR5X uses a 16n prefetch, which is twice the size of GDDR5’s 8n prefetch. This translates to 64B reads/writes, meaning that GDDR5X memory chips are actually fetching (or writing) data in blocks of 64 bytes, and then transmitting it over multiple cycles of the memory bus. As discussed earlier, this change in the prefetch size is why the memory controller organization of GP104 is 8x32b instead of 4x64b like GM204, as each memory controller can now read and write 64B segments of data via a single memory channel.
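The access sizes follow directly from the prefetch depth. The sketch below assumes a 32-bit interface per memory chip, in line with the 32-bit channels mentioned above; the function name is illustrative.

```python
# Bytes moved per DRAM access = prefetch depth (n) x interface width.
# The 32-bit per-chip interface is an assumption matching the 32-bit
# memory channels described in the text.

def access_bytes(prefetch_n: int, interface_bits: int = 32) -> int:
    return prefetch_n * interface_bits // 8


GDDR5_ACCESS = access_bytes(8)     # 8n prefetch
GDDR5X_ACCESS = access_bytes(16)   # 16n prefetch

if __name__ == "__main__":
    print(f"GDDR5:  {GDDR5_ACCESS} bytes per access")
    print(f"GDDR5X: {GDDR5X_ACCESS} bytes per access")
```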

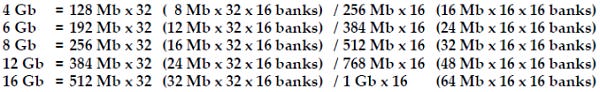

Overall GDDR5X is planned to offer enough bandwidth for at least the next couple of years. The current sole supplier of GDDR5X, Micron, is initially developing GDDR5X from 10 to 12Gbps, and the JEDEC has been talking about taking that to 14Gbps. Longer term, Micron thinks the technology can hit 16Gbps, which would be a true doubling of GDDR5’s current top speed of 8Gbps. With that said, even with a larger 384-bit memory bus (ala GM200) this would only slightly surpass what kind of bandwidth HBM2 offers today, reinforcing the fact that GDDR5X will fill the gap between traditional GDDR5 and HBM2.
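For reference, peak bandwidth is just bus width times per-pin data rate. The sketch below reproduces the comparison above; the 384-bit, 16Gbps configuration is the hypothetical card from the paragraph, not an announced product, and the 720GB/sec figure is the P100 number quoted earlier.

```python
# Peak memory bandwidth in GB/sec from bus width (bits) and per-pin data
# rate (Gbps). The 384-bit/16Gbps case is hypothetical.

def bandwidth_gb_per_s(bus_width_bits: int, gbps_per_pin: float) -> float:
    return bus_width_bits * gbps_per_pin / 8


P100_HBM2_GB_PER_S = 720.0

if __name__ == "__main__":
    gtx_1080 = bandwidth_gb_per_s(256, 10)
    future = bandwidth_gb_per_s(384, 16)
    print(f"GTX 1080 (256-bit, 10Gbps): {gtx_1080:.0f} GB/sec")
    print(f"Hypothetical 384-bit, 16Gbps: {future:.0f} GB/sec")
    print(f"Margin over P100's HBM2: {(future / P100_HBM2_GB_PER_S - 1) * 100:.1f}%")
```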

Meanwhile when it comes to power consumption and power efficiency, GDDR5X will turn back the clock, at least a bit. Thanks in large part to a lower operating voltage of 1.35V, circuit design changes, and a smaller manufacturing node for the DRAM itself, 10Gbps GDDR5X only requires as much power as 7Gbps GDDR5. This means that relative to GTX 980, GTX 1080’s faster GDDR5X is essentially “free” from a power perspective, not consuming any more power than before, according to NVIDIA.

That said, while this gets NVIDIA more bandwidth for the same power (43% more, in fact), NVIDIA has now put themselves back to where they were with GTX 980. GDDR5X can scale higher in frequency, but doing so will almost certainly further increase power consumption. As a result they are still going to have to carefully work around growing memory power consumption if they continue down the GDDR5X path for future, faster cards.

On a final specification note, GDDR5X also introduces non-power-of-two memory chip capacities such as 12Gb. These aren’t being used for GTX 1080, which uses 8Gb chips, but I wouldn’t be surprised if we see these used down the line. The atypical sizing would allow NVIDIA to offer additional memory capacities without resorting to asymmetrical memory configurations as is currently the case, all the while avoiding the bandwidth limitations that can result from that.

Moving on to implementation details, GP104 brings with it a new memory controller design to support GDDR5X. As intended with the specification, this controller design is backwards compatible with traditional GDDR5, and will allow NVIDIA to support both memory standards. At this point NVIDIA hasn’t talked about what kinds of memory speeds their new controller can ultimately hit, but the cropped signal analysis diagram published in their slide deck shows a very tight eye. Given the fact that NVIDIA’s new memory controller can operate at 8Gbps in GDDR5 mode, I would be surprised if we don’t see at least 12Gbps GDDR5X by the tail-end of Pascal’s lifecycle.

But perhaps the bigger challenge is on the board side of matters, where NVIDIA and their partners needed to develop PCBs capable of handling the tighter signaling requirements of the GDDR5X memory bus. At this point video cards are moving 10Gbps/pin over a non-differential bus, which is itself a significant accomplishment. And keep in mind that in the long run, the JEDEC and Micron want to push this higher still.

To that end it somewhat undersells the whole process to just say that GDDR5X required “tighter signaling requirements”, but it’s an apt description. There is no single technology in place on the physical trace side to make this happen; it’s just a lot of precision, intensive work into ensuring that the traces and the junctions between the PCB, the chip, and the die all retain the required signal integrity. With a 256-bit wide bus we’re not looking at something too wide compared to the 384 and 512-bit buses used on larger GPUs, so the task is somewhat simpler in that respect, but it’s still quite a bit of effort to minimize the crosstalk and other phenomena that degrade the signal, and which GDDR5X has little tolerance for.

As it stands I suspect we have not yet seen the full ramifications of the tighter bus requirements, and we probably won’t for cards that use the reference board or the memory design lifted from the reference board. For stability reasons, data buses are usually overengineered, and it’s likely the GDDR5X memory itself that’s holding back overclocking. Things will likely get more interesting if and when GDDR5X filters its way down to cheaper cards, where keeping costs in check and eking out higher margins becomes more important. Alternatively, as NVIDIA’s partners get more comfortable with the tech and its requirements, it’ll be interesting to see where we end up with the ultra-high-end overclocking cards (the Kingpins, Lightnings, Matrices, etc.) and whether all of the major partners can keep up in that race.

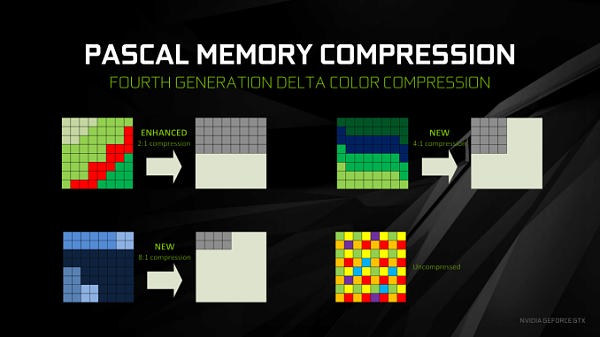

Feeding Pascal, Cont: 4th Gen Delta Color Compression

Now that we’ve seen GDDR5X in depth, let’s talk about the other half of the equation when it comes to feeding Pascal: delta color compression.

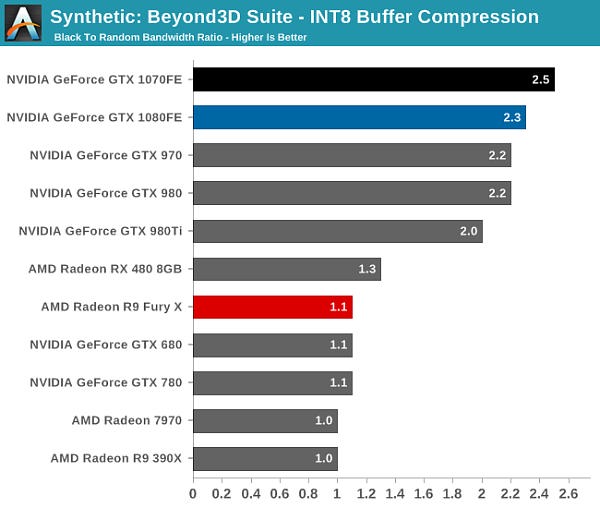

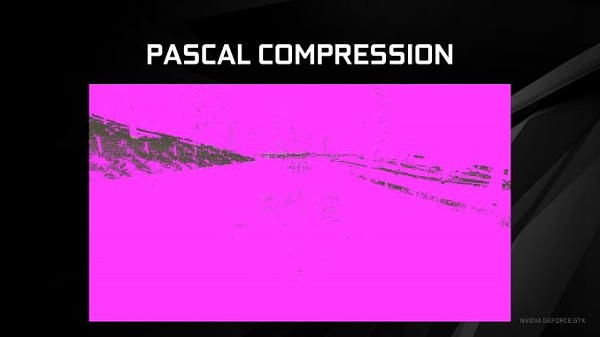

NVIDIA has utilized delta color compression for a number of years now. However the technology only came into greater prominence in the previous Maxwell 2 generation, when NVIDIA disclosed delta color compression’s existence and offered a basic overview of how it worked. As a reminder, delta color compression is a per-buffer/per-frame compression method that breaks down a frame into tiles, and then looks at the differences between neighboring pixels: their deltas. By utilizing a large pattern library, NVIDIA is able to try different patterns to describe these deltas in as few pixels as possible, ultimately conserving bandwidth throughout the GPU, not only reducing DRAM bandwidth needs, but also L2 bandwidth needs and texture unit bandwidth needs (in the case of reading back a compressed render target).

Since its inception NVIDIA has continued to tweak and push the technology for greater compression and to catch patterns they missed on prior generations, and Pascal in that respect is no different. With Pascal we get the 4th generation of the technology, and while there’s nothing radical here compared to the 3rd generation, it’s another element of Pascal where there has been an iterative improvement on the technology.

New to Pascal is a mix of improved compression modes and new compression modes. 2:1 compression mode, the only delta compression mode available up through the 3rd generation, has been enhanced with the addition of more patterns to cover more scenarios, meaning NVIDIA is able to 2:1 compress blocks more often.

Meanwhile, new to delta color compression with Pascal are 4:1 and 8:1 compression modes, joining the aforementioned 2:1 mode. Unlike 2:1 mode, the higher compression modes are a little less straightforward, as there’s a bit more involved than simply the pattern of the pixels. 4:1 compression is in essence a special case of 2:1 compression, where NVIDIA can achieve better compression when the deltas between pixels are very small, allowing those differences to be described in fewer bits. 8:1 is more radical still; rather than operating on individual pixels, it operates on multiple 2×2 blocks. Specifically, after NVIDIA’s constant color compressor does its job (finding 2×2 blocks of identical pixels and compressing them to a single sample), the 8:1 delta mode then applies 2:1 delta compression to the already compressed blocks, achieving the titular 8:1 effective compression ratio.
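To make the relationship between the three modes a little more concrete, here is a toy sketch. To be clear, this is not NVIDIA's algorithm: the actual pattern library and bit layouts are not public, so everything here is an assumption made for illustration, including the single-channel 8-bit pixels, the 2-bit and 4-bit delta fields, the omission of any anchor-value overhead, and all of the function names.

```python
# Toy model of mode selection for a tile of 8-bit, single-channel pixels.
# Tile dimensions are assumed to be even. Purely illustrative.
from typing import List, Optional

Tile = List[List[int]]


def deltas(values: List[int]) -> List[int]:
    # Difference between each value and its predecessor.
    return [b - a for a, b in zip(values, values[1:])]


def fits_signed(values: List[int], bits: int) -> bool:
    # True if every value fits in a two's-complement field of `bits` bits.
    lo, hi = -(1 << (bits - 1)), (1 << (bits - 1)) - 1
    return all(lo <= v <= hi for v in values)


def collapse_constant_blocks(tile: Tile) -> Optional[List[int]]:
    # Constant color step: reduce each 2x2 block of identical pixels to a
    # single sample. Returns None if any block is not constant.
    samples = []
    for y in range(0, len(tile), 2):
        for x in range(0, len(tile[0]), 2):
            block = {tile[y][x], tile[y][x + 1],
                     tile[y + 1][x], tile[y + 1][x + 1]}
            if len(block) != 1:
                return None
            samples.append(tile[y][x])
    return samples


def choose_mode(tile: Tile) -> str:
    samples = collapse_constant_blocks(tile)
    if samples is not None and fits_signed(deltas(samples), 4):
        return "8:1"  # 4:1 from constant blocks, then 2:1 delta on top
    flat_deltas = deltas([p for row in tile for p in row])
    if fits_signed(flat_deltas, 2):
        return "4:1"  # very small deltas: 2 bits instead of 8
    if fits_signed(flat_deltas, 4):
        return "2:1"  # moderate deltas: 4 bits instead of 8
    return "uncompressed"
```

In this toy model a flat-shaded tile lands in 8:1, a gentle gradient in 4:1, a steeper gradient in 2:1, and noise falls through uncompressed, which mirrors the ordering described above even though the real hardware's criteria are surely more involved.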

Overall, delta color compression represents one of the interesting tradeoffs NVIDIA has to make in the GPU design process. The number of patterns is essentially a function of die space, so NVIDIA could always add more patterns, but would the memory bandwidth improvements be worth the real cost of die space and the power cost of those transistors? Especially since NVIDIA has already implemented the especially common patterns, which means new patterns likely won’t occur as frequently. NVIDIA of course pushed ahead here, thanks in part to the die and power savings of 16nm FinFET, but it gives us an idea of where they might (or might not) go in future generations in order to balance the costs and benefits of the technology, with less of an emphasis on patterns and instead making more novel use of those patterns.

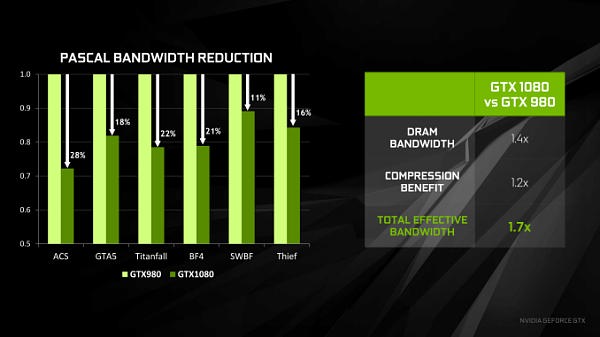

To put all of this in numbers, NVIDIA pegs the effective increase in memory bandwidth from delta color compression alone at 20%. The difference is of course per-game, as the effectiveness of the tech depends on how well a game sticks to patterns (and if you ever create a game with random noise, you may drive an engineer or two insane), but 20% is a baseline number for the average. Meanwhile for anyone keeping track of the numbers over Maxwell 2, this is a bit less than the gains with NVIDIA’s last generation architecture, where the company claimed the average gain was 25%.

The net impact then, as NVIDIA likes to promote it, is a 70% increase in the total effective memory bandwidth. This comes from the earlier 40% (technically 42.9%) actual memory bandwidth gains in the move from 7Gbps GDDR5 to 10Gbps GDDR5X, coupled with the 20% effective memory bandwidth increase from delta compression. Keep those values in mind, as we’re going to get back to them in a little bit.
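The two gains multiply rather than add, which is how 40% and 20% land at roughly 70%. A minimal sketch of that arithmetic, using only the figures quoted above (the function name is illustrative):

```python
# Effective memory bandwidth gain: raw data rate gain multiplied by the
# average gain from delta color compression.

def effective_gain(old_gbps: float, new_gbps: float,
                   compression_gain: float) -> tuple:
    raw = new_gbps / old_gbps
    return raw, raw * (1 + compression_gain)


if __name__ == "__main__":
    raw, effective = effective_gain(7, 10, 0.20)
    print(f"raw: +{(raw - 1) * 100:.1f}%")
    print(f"effective: +{(effective - 1) * 100:.0f}%")
```

Using the unrounded 42.9% gives a result just over NVIDIA's rounded 70% figure.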

Meanwhile from a graphical perspective, to showcase the impact of delta color compression, NVIDIA sent over a pair of screenshots for Project Cars, colored to show what pixels had been compressed. Shown in pink, even Maxwell can compress most of the frame, really only struggling with finer details such as the trees, the grass, and edges of buildings. Pascal, by comparison, gets most of this. Trees and buildings are all but eliminated as visually distinct uncompressed items, leaving only patches of grass and indistinct fringe elements. It should be noted that these screenshots have most likely been picked because they’re especially impressive, seeing as how not all games compress this well, but it’s none the less a potent example of how much of a frame Pascal can compress.

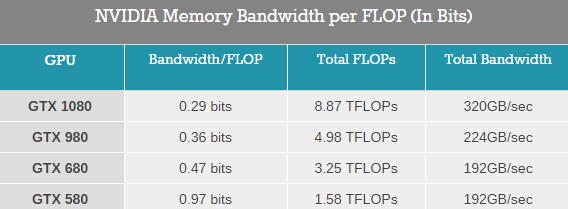

Finally, while we’re on the subject of compression, I want to talk a bit about memory bandwidth relative to other aspects of the GPU. While Pascal (in the form of GTX 1080) offers 43% more raw memory bandwidth than GTX 980 thanks to GDDR5X, it’s important to note just how quickly this memory bandwidth is consumed. Thanks to GTX 1080’s high clockspeeds, the raw throughput of the ROPs is coincidentally also 43% higher. Or we have the case of the CUDA cores, whose total throughput is 78% higher, shooting well past the raw memory bandwidth gains.

While it’s not a precise metric, the amount of bandwidth available per FLOP has continued to drop over the years with NVIDIA’s video cards. GTX 580 offered just short of 1 bit of memory bandwidth per FLOP, and by GTX 980 this was down to 0.36 bits/FLOP. GTX 1080 is lower still, now down to 0.29 bits/FLOP thanks to the increase in both CUDA core count and frequency as afforded by the 16nm process.
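For anyone who wants to check the ratios, the sketch below derives them from each card's published memory bandwidth, CUDA core count, and shader/boost clock. Those specifications are assumptions pulled from the cards' public spec sheets rather than from this section, and the names are illustrative.

```python
# Bits of memory bandwidth available per FP32 FLOP.
# Specs: (memory bandwidth in GB/sec, CUDA cores, shader/boost clock in MHz).

CARDS = {
    "GTX 580": (192.4, 512, 1544),
    "GTX 980": (224.0, 2048, 1216),
    "GTX 1080": (320.0, 2560, 1733),
}


def bits_per_flop(bandwidth_gb_s: float, cores: int, clock_mhz: float) -> float:
    gbits_per_s = bandwidth_gb_s * 8          # GB/sec -> Gbit/sec
    gflops = cores * 2 * clock_mhz / 1000.0   # FMA counted as 2 FLOPs
    return gbits_per_s / gflops


if __name__ == "__main__":
    for name, spec in CARDS.items():
        print(f"{name}: {bits_per_flop(*spec):.2f} bits/FLOP")
```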

The good news here is that at least for graphical tasks, the CUDA cores generally aren’t the biggest consumer of DRAM bandwidth. That would fall to the ROPs, which are packed alongside the L2 cache and memory controllers for this very reason. In that case GTX 1080’s bandwidth gains keep up with the ROP performance increase, but only by just enough.

The overall memory bandwidth needs of GP104 still outpace the memory bandwidth gains from GDDR5X, and this is why features such as delta color compression are so important to GP104’s performance. GP104 is perpetually memory bandwidth starved (adding more memory bandwidth will improve performance, as we’ll see in our overclocking results), and that means that NVIDIA will continue to try to conserve memory bandwidth usage as much as possible through compression and other means. How long they can fight this battle remains to be seen (they already encounter diminishing returns in some cases), but in the meantime this allows NVIDIA to utilize smaller memory buses, keeping down the die size and power costs of their GPUs, making PCB costs cheaper, and of course boosting profit margins at the same time.

Asynchronous Concurrent Compute: Pascal Gets More Flexible

Continuing our dive into the Pascal architecture, while Pascal did not make any fundamental execution changes to the CUDA cores, the same is not true for how work is allocated/scheduled on the CUDA cores. In fact, next to the addition of GDDR5X, I’d consider the changes to work scheduling to be the other great change to the overall Pascal core architecture. With Pascal, NVIDIA has significantly improved their ability to allocate and balance workloads, which in turn has ramifications in several different scenarios. But for the AnandTech audience the greatest significance is going to be in what it means for work concurrency when using asynchronous compute.

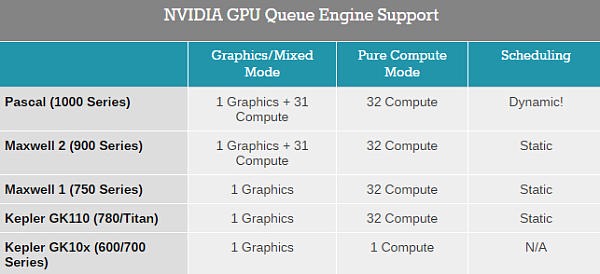

However to understand just what NVIDIA has done here, we’re going to have to first take a step back and try to unravel the ball of yarn that is asynchronous compute, concurrency, and load balancing on prior NVIDIA architectures. From a technical perspective, NVIDIA has slowly evolved their work queue execution abilities over time. Consumer Kepler (GK10x) could only handle a single work queue, while Big Kepler (GK110/GK210) added HyperQ, which introduced a 32 queue setup, but one that could only be used with pure compute workloads. For HPC users this was a big deal, but for consumer use cases there was no support for mixing HyperQ compute queues with a graphics queue.

Moving to Maxwell, Maxwell 1 was a repeat of Big Kepler, offering HyperQ without any way to mix it with graphics. It was only with Maxwell 2 that NVIDIA finally gained the ability to mix compute queues with graphics mode, allowing for the single graphics queue to be joined with up to 31 compute queues, for a total of 32 queues.

This from a technical perspective is all that you need to offer a basic level of asynchronous compute support: expose multiple queues so that asynchronous jobs can be submitted. Past that, it’s up to the driver/hardware to handle the situation as it sees fit; true async execution is not guaranteed. Frustratingly then, NVIDIA never enabled true concurrency via asynchronous compute on Maxwell 2 GPUs. This despite stating that it was technically possible. For a while NVIDIA never did go into great detail as to why they were holding off, but it was always implied that this was for performance reasons, and that using async compute on Maxwell 2 would more likely than not reduce performance rather than improve it.

There’s a maxim in the consumer electronics industry that if you want to know what’s wrong with the current product, wait for the next one to be released. And in the case of the Pascal launch, this definitely ended up being true. Now that Pascal is upon us and NVIDIA has fixed that which ails Maxwell 2, we finally know why NVIDIA has held off from enabling concurrency with asynchronous compute on Maxwell 2 all this time.

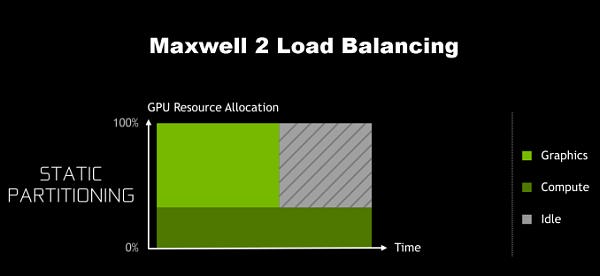

The issue, as it turns out, is that while Maxwell 2 supported a sufficient number of queues, how Maxwell 2 allocated work wasn’t very friendly for async concurrency. Under Maxwell 2 and earlier architectures, GPU resource allocation had to be decided ahead of execution. Maxwell 2 could vary how the SMs were partitioned between the graphics queue and the compute queues, but it couldn’t dynamically alter them on-the-fly. As a result, it was very easy on Maxwell 2 to hurt performance by partitioning poorly, leaving SM resources idle because they couldn’t be used by the other queues.

NVIDIA’s theoretical example involves when the graphics queue runs out of work before the compute queue, though in practice either one can happen, and either one would be similarly bad. There are a number of caveats in this example (among other things, this assumes that other new work can’t be started until both queues are finished), so please don’t consider this a catch-all for how concurrency under asynchronous compute works, but it covers the most basic and common case where a compute workload is closely tied to a graphics workload.

Meanwhile not shown in these simple graphical examples is that for async’s concurrent execution abilities to be beneficial at all, there needs to be idle time bubbles to begin with. Throwing compute into the mix doesn’t accomplish anything if the graphics queue can sufficiently saturate the entire GPU. As a result, making async concurrency work on Maxwell 2 is a tall order at best, as you first needed execution bubbles to fill, and even then you’d need to almost perfectly determine your partitions ahead of time.

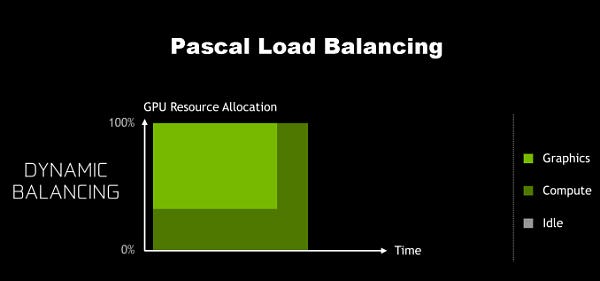

Getting back to Pascal then, Pascal finally fixes the resource allocation issue. For Pascal, NVIDIA has implemented a dynamic load balancing system to replace Maxwell 2’s static partitions. Now if the queues end up unbalanced and one of the queues runs out of work early, the driver and work schedulers can step in and fill up the remaining time with work from the other queues.
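The difference between the two approaches can be illustrated with a deliberately simplified model. This is a sketch under loud assumptions, not a description of NVIDIA's scheduler: work is measured in abstract SM-time units, it divides perfectly across SMs, rebalancing is instant and free, and nothing new can start until both queues finish (the same caveat as NVIDIA's example). All names and numbers are hypothetical.

```python
# Toy comparison of static SM partitioning (Maxwell 2 style) against
# idealized dynamic load balancing (Pascal style).

def static_partition_time(gfx_work: float, compute_work: float,
                          total_sms: int, gfx_sms: int) -> float:
    # Each queue runs only on its fixed share of SMs. The frame is done
    # when the slower queue finishes; the other queue's SMs sit idle.
    compute_sms = total_sms - gfx_sms
    return max(gfx_work / gfx_sms, compute_work / compute_sms)


def dynamic_balance_time(gfx_work: float, compute_work: float,
                         total_sms: int) -> float:
    # Idle SMs are immediately handed work from the other queue, so all
    # SMs stay busy until the combined work is finished.
    return (gfx_work + compute_work) / total_sms


if __name__ == "__main__":
    sms, gfx, compute = 20, 120.0, 80.0
    for gfx_sms in (15, 12, 8):
        t = static_partition_time(gfx, compute, sms, gfx_sms)
        print(f"static, {gfx_sms} SMs for graphics: {t:.1f}")
    print(f"dynamic: {dynamic_balance_time(gfx, compute, sms):.1f}")
```

In this model a static split only matches the dynamic result when the partition happens to be chosen perfectly ahead of time; any other split leaves SMs idle and finishes later.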

In concept it sounds simple, and in practice it should make a large difference to how beneficial async compute can be on NVIDIA’s architectures. Adding more work to create concurrency to fill execution bubbles only works if the queue scheduling itself doesn’t create bubbles, and this was Maxwell 2’s Achilles’ heel that Pascal has addressed.

At the same time however I feel it’s important to note that the scheduling change alone won’t (and can’t) guarantee that Pascal will see significant gains from async compute across the board. Async compute itself is a catch-all term (there are lots of things you can do with asynchronous work submission/execution), so async doesn’t mean that a game is making significant use of concurrency. Furthermore the concurrency is still based on filling execution bubbles, and that means that there needs to be bubbles to fill in the first place. In other words, the greatest gains from async will come from scenarios where for whatever reason, the graphics queue and its synchronous shaders can’t completely saturate the GPU on its own.

Right now I think it’s going to prove significant that while NVIDIA introduced dynamic scheduling in Pascal, they also didn’t make the architecture significantly wider than Maxwell 2. As we discussed earlier in how Pascal has been optimized, it’s a slightly wider but mostly higher clocked successor to Maxwell 2. As a result there’s not too much additional parallelism needed to fill out GP104; relative to GM204, you only need 25% more threads, a relatively small jump for a generation. This means that while NVIDIA has made Pascal far more accommodating to asynchronous concurrent execution, there’s still no guarantee that any specific game will find bubbles to fill. Thus far there’s little evidence to indicate that NVIDIA’s been struggling to fill out their GPUs with Maxwell 2, and with Pascal only being a bit wider, it may not behave much differently in that regard.

Meanwhile, because this is a question that I’m frequently asked, I will make a very high level comparison to AMD. Ever since the transition to unified shader architectures, AMD has always favored higher ALU counts; Fiji had more ALUs than GM200, mainstream Polaris 10 has nearly as many ALUs as high-end GP104, etc. All other things held equal, this means there are more chances for execution bubbles in AMD’s architectures, and consequently more opportunities to exploit concurrency via async compute. We’re still very early into the Pascal era (the first game supporting async on Pascal, Rise of the Tomb Raider, was just patched last week), but on the whole I don’t expect NVIDIA to benefit from async by as much as we’ve seen AMD benefit. At least not with well-written code.

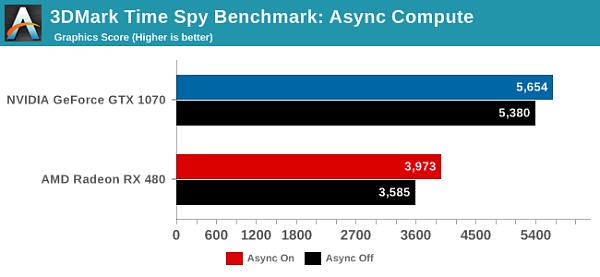

Otherwise, for the time being, the one good benchmark we have here is 3DMark Time Spy, which was released last week. The ground up DirectX 12 benchmark attempts to heavily overlap rendering passes to fill those aforementioned execution bubbles.

Taking a quick run of the benchmark, on a relative basis we see a 10.8% gain from using async compute plus concurrency for the RX 480, and a 5.4% gain for the GTX 1070. This is but one benchmark (and technically not even a game at that), but for what it’s worth this is the kind of trend I’m expecting to see in future games as they get better about exploiting workload concurrency via async compute.

Finally, getting back to the subject of dynamic scheduling, I’ve spent some time mulling over what’s probably the obvious question: if dynamic scheduling is so great, why didn’t NVIDIA do this sooner? It’s not a question I have an answer to, but I strongly suspect it’s another one of those tradeoffs that’s rooted in balancing costs and benefits. Dynamic scheduling requires greater management of hazards that simply weren’t an issue with static scheduling, as now you need to handle everything involved with suddenly switching an SM to a different queue. Meanwhile NVIDIA more than likely paid a die space penalty for implementing dynamic scheduling. GPUs continually sit on the fence between being an ultra-fast statically scheduled array of ALUs and an ultra-flexible somewhat smaller array of ALUs, and GPU vendors get to sit in the middle trying to figure out which side to lean towards in order to deliver the best performance for workloads that are 2-5 years down the line. It is, if you’ll pardon the pun, a careful balancing act for everyone involved.

Preemption Improved: Fine-Grained Preemption for Time-Critical Tasks

Continuing our discussion of asynchronous compute and concurrency, the Pascal architecture includes another major feature update related to how work is scheduled. If you caught our earlier coverage of NVIDIA’s Pascal P100 accelerator, you should already have an idea of what this is, as NVIDIA touted it as an HPC feature as well. I am of course speaking about fine-grained preemption.

Before we start, in writing this article I spent some time mulling over how to best approach the subject of fine-grained preemption, and ultimately I’m choosing to pursue it on its own page, and not on the same page as concurrency. Why? Well although it is an async compute feature, and a good way to get time-critical independent tasks started right away, its purpose isn’t to improve concurrency.

Asynchronous compute is in a sense a catch-all term, as the asynchronous execution of tasks has a number of different uses. But for consumer products, it’s important to make a distinction between those features that improve concurrency and allow a GPU to get more work done (which is the best understood feature of asynchronous compute) and other features that make more novel use of async. Fine-grained preemption is distinctly in the latter category.

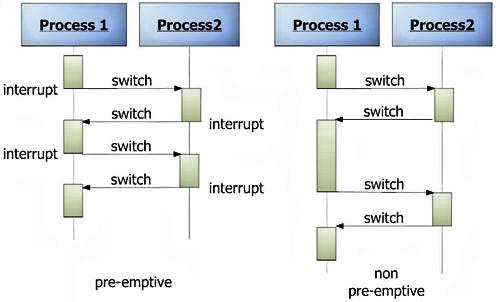

So what is preemption then? In a nutshell, it’s the ability to interrupt an active task (context switch) on a processor and replace it with another task, with the further ability to later resume where you left off. Historically this is a concept that’s more important for CPUs than GPUs, especially back in the age of single core CPUs, as preemption was part of how single core CPUs managed to multitask in a responsive manner. GPUs, for their part, have supported context switching and basic preemption as well for quite some time, however until the last few years it has not been a priority, as GPUs are meant to maximize throughput in part by rarely switching tasks.

(Robert Berger/EE Times: Getting real (time) about embedded GNU/Linux)

Preemption in turn is defined by granularity: how quickly a processor can actually context switch. This ranges from coarse-grained context switching, which is essentially only being able to context switch at certain points in execution, out to fine-grained context switching that allows for an almost immediate switch at any point in time. What’s new for Pascal then is that preemptive context switching just got a lot finer grained, especially for compute.

Diving deeper, to understand what NVIDIA has done, let’s talk about prior generation architectures. Previously, with Maxwell 2 and older architectures, NVIDIA only offered very coarse grained preemption. For graphics tasks or mixed graphics/compute tasks, NVIDIA could only preempt at the boundary of draw calls: collections of triangles and other draw commands, potentially encompassing dozens of polygons and hundreds (if not thousands) of pixels and threads.

The great thing about preempting at a draw call boundary is that it’s relatively clean, as draw calls are a very large and isolated unit of work. The problem with preempting at a draw call boundary is that draw calls are a very large unit of work; just because you call for the preemption doesn’t mean you’re going to get it any time soon. This analogy extends over to the compute side as well, as whole blocks of threads needed to be completed before a preemption could take place.

In fact it’s probably the compute side that’s a better real-world example of the problem with coarse-grained preemption. If you’ve ever run a GPU compute program and had your display driver reboot due to Timeout Detection and Recovery (TDR), then you’ve experienced coarse-grained preemption. For end-user responsiveness and quality purposes, Windows will reset a GPU if it doesn’t respond for more than around 2 seconds. With coarse-grained preemption, it is possible to schedule a compute task that takes so long to run that a block can’t complete in time for the Windows check-in, triggering the TDR reset. Consequently, there is good reason to support finer grained preemption for both graphics and compute tasks.

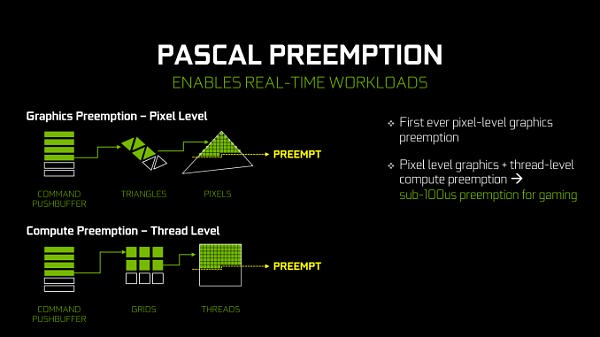

This in turn is where Pascal steps in. Along with the aforementioned improvements to how Pascal can fill up its execution pipelines, Pascal also implements a radically improved preemption ability. Depending on whether it’s a graphics or a pure compute task, Pascal can now preempt at the thread level or even the instruction level respectively.

Starting with the case of a graphics task or a mixed graphics + compute task, Pascal can now interrupt at the thread level. For a compute workload this is fairly self-explanatory. Meanwhile for a graphics workload the idea is very similar. Though we’re accustomed to working with pixels as the fundamental unit in a graphics workload, under the hood the pixel is just another thread. As a result the ability to preempt at a thread has very similar consequences for both a graphics workload and the compute threads mixed in with a graphics workload.

With Maxwell 2 and earlier architectures, the GPU would need to complete the whole draw call before preempting. However now with Pascal it can preempt at the pixel level within a triangle, within a draw call. When a preemption request is received, Pascal will stop rasterizing new pixels, let the currently rastered pixels finish going through the CUDA cores, and finally initiate the context switch once the above is done. NVIDIA likes to call this “Pixel Level Preemption.”

In terms of absolute time the benefit of course varies from game to game, and there’s also a bit of luck involved depending on where in the draw call you are when the preemption request is made. In general, though, draw call size and complexity have been going up over the years due to a combination of CPU limits (draw calls are expensive under DX11/OpenGL, which is why we have DX12/Vulkan) and the fact that pixel shaders continue to get longer, consequently taking more time for a given pixel/thread to fully execute. In the end, the result is that Pascal can now execute a preemptive context switch for graphics much more rapidly than Maxwell 2 could.
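As a rough illustration of why the granularity matters, the sketch below compares how long a preemption request has to wait under the two schemes. Everything in it is hypothetical: the draw call is modeled as fixed-length "waves" of pixels in flight, and the pixel count, wave size, and shader time are invented numbers rather than measurements of any GPU. Times are integer microseconds.

```python
import math


def draw_call_duration_us(pixels, pixels_in_flight, shader_us):
    """Length of a draw call that shades its pixels one wave at a time."""
    waves = math.ceil(pixels / pixels_in_flight)
    return waves * shader_us


def wait_draw_call_boundary_us(request_us, pixels, pixels_in_flight, shader_us):
    """Maxwell 2 style: the whole draw call must finish before the switch."""
    return draw_call_duration_us(pixels, pixels_in_flight, shader_us) - request_us


def wait_pixel_level_us(request_us, shader_us):
    """Pascal style: stop rasterizing, then let the pixels in flight drain."""
    elapsed_in_wave = request_us % shader_us
    # A request landing exactly on a wave boundary is charged a full wave,
    # which is pessimistic but keeps the model simple.
    return shader_us - elapsed_in_wave


# Hypothetical draw call: 2M pixels, 40K pixels in flight, 20 us per wave.
request_us = 130  # the preemption request arrives 130 us into the draw call
print("draw call boundary wait:",
      wait_draw_call_boundary_us(request_us, 2_000_000, 40_000, 20), "us")
print("pixel level wait:", wait_pixel_level_us(request_us, 20), "us")
```

The draw-call wait scales with whatever is left of the draw call, which is where the luck comes in; the pixel-level wait is bounded by the length of a single shader invocation.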

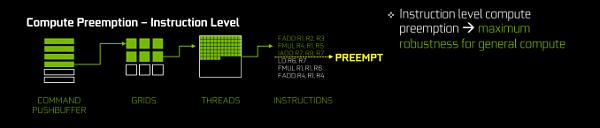

Meanwhile in a pure compute scenario (i.e. running a CUDA program) Pascal takes things one step further. Not satisfied with preempting at the thread level, Pascal can actually preempt at the lowest level of them all, the instruction level. This means preempting a thread mid-flow, before the next instruction begins. The thread doesn’t even need to reach completion; with instruction level preemption, the thread can be pulled virtually immediately, Vaudeville Hook style.

This kind of fine-grained preemption is the most powerful, but it’s also primarily on the GPU for compute purposes. The greatest significance to consumers is allowing CUDA compute applications to run without triggering the TDR watchdog, as now they can stop on the spot and let the watchdog complete its work. This, not-so-coincidentally, has been one of Microsoft’s long-term goals with WDDM development, as they’ve been pushing GPU vendors to support finer-grained preemption for this very reason. Though more broadly speaking, NVIDIA’s plans are greater than just WDDM and the TDR watchdog, such as allowing functionality like interactive debugging of CUDA programs on a single-GPU system (previously there were greater limitations and you’d often need multiple GPUs).
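The TDR case can be sketched in a few lines. This is a simplified model, not a description of how the Windows watchdog is implemented: the 2 second limit is the approximate figure cited earlier, the 100us switch cost is NVIDIA's quoted upper bound for Pascal, and the function names and the 3.5 second thread block are made up for the example.

```python
TDR_DELAY_S = 2.0          # watchdog limit, "around 2 seconds" per the text
CONTEXT_SWITCH_S = 100e-6  # NVIDIA's "under 100us" figure, taken as an upper bound


def unresponsive_time_s(longest_block_s, granularity):
    """Worst-case time before the GPU can answer a preemption request."""
    if granularity == "block":
        # Coarse-grained: the running thread block must complete first.
        # The switch itself is ignored here, so this is a lower bound.
        return longest_block_s
    if granularity == "instruction":
        # Fine-grained: stop before the next instruction, then switch.
        return CONTEXT_SWITCH_S
    raise ValueError(f"unknown granularity: {granularity}")


def triggers_tdr(longest_block_s, granularity, tdr_delay_s=TDR_DELAY_S):
    """True if the watchdog would time out waiting for the GPU."""
    return unresponsive_time_s(longest_block_s, granularity) > tdr_delay_s


# Hypothetical CUDA kernel whose slowest thread block runs for 3.5 seconds.
for granularity in ("block", "instruction"):
    print(granularity, "-> TDR reset:", triggers_tdr(3.5, granularity))
```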

The trade-off for finer-grained preemption is that the deeper you go (the more “in the middle of things” you allow the interruption), the more work it is to context switch. Preempting at the draw call level involves very little state information, preempting at the thread level involves a fair bit of state, and preempting at the instruction level involves a massive amount of state, including the full contents of the L1 caches and the register files. In the case of the latter you’re looking at a minimum of several megabytes of state information that have to be recorded and sent to VRAM for storage. So finer-grained preemption is a very big deal at the implementation level, requiring new hardware to load and save states quickly, and then get it off to VRAM without skipping a beat.
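A back-of-the-envelope estimate shows where "several megabytes" comes from. The per-SM sizes below are the figures NVIDIA publishes for a fully enabled GP104 (20 SMs, each with a 256KB register file, 48KB of L1 and 96KB of shared memory), and 320GB/sec is GTX 1080's peak memory bandwidth. Exactly which state the hardware saves is not something NVIDIA has detailed, so treat both the totals and the transfer time as assumptions.

```python
KIB = 1024

# Assumed per-SM storage on a fully enabled GP104 (GTX 1080).
SM_COUNT = 20
REGISTER_FILE_KIB_PER_SM = 256
L1_KIB_PER_SM = 48
SHARED_KIB_PER_SM = 96

PEAK_BANDWIDTH_BYTES_PER_S = 320e9  # GTX 1080 peak memory bandwidth


def state_bytes(include_shared_memory=False):
    """Bytes of register file and L1 (optionally shared memory) across all SMs."""
    per_sm_kib = REGISTER_FILE_KIB_PER_SM + L1_KIB_PER_SM
    if include_shared_memory:
        per_sm_kib += SHARED_KIB_PER_SM
    return SM_COUNT * per_sm_kib * KIB


def transfer_time_us(num_bytes):
    """Time to stream the state to VRAM at peak bandwidth, in microseconds."""
    return num_bytes / PEAK_BANDWIDTH_BYTES_PER_S * 1e6


for with_shared in (False, True):
    size = state_bytes(with_shared)
    print(f"shared memory included: {with_shared}  "
          f"state: {size / (KIB * KIB):.2f} MiB  "
          f"best-case save: {transfer_time_us(size):.1f} us")
```

Even at peak bandwidth, saving this much state takes tens of microseconds, and a real save will not hit peak.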

The actual time cost for preemption varies with the workload, but at the most basic level, when the GPU is ready to execute the context switch, NVIDIA tells us that it can be done in under 100us (0.1ms), or about 170,000 clock cycles. Relative to the GPU this is not an insignificant amount of time, and while it’s much faster than the total context switch time from Maxwell 2, it does mean that context switching is still a somewhat expensive operation (roughly 50-100x more so than on a modern Intel CPU). So context switching still needs to be used intelligently, and, for best performance, infrequently.
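The arithmetic behind those figures is simple enough to check. The 1.7GHz clock is an assumption on my part (it is what the 170,000 cycle figure implies, and it is close to GTX 1080's boost clock), and the implied CPU numbers are just the 100us figure divided by the quoted 50-100x ratio, not measurements.

```python
def cycles_in(duration_us, clock_mhz):
    """Clock cycles elapsed: microseconds times MHz gives cycles directly."""
    return duration_us * clock_mhz


def implied_cpu_switch_us(gpu_switch_us, ratio):
    """CPU context switch time implied by a GPU-to-CPU cost ratio."""
    return gpu_switch_us / ratio


GPU_SWITCH_US = 100    # NVIDIA's "under 100us" upper bound
GPU_CLOCK_MHZ = 1700   # assumed, roughly GTX 1080's boost clock

print("GPU cycles per context switch:", cycles_in(GPU_SWITCH_US, GPU_CLOCK_MHZ))
for ratio in (50, 100):
    print(f"at {ratio}x, implied CPU switch time:",
          implied_cpu_switch_us(GPU_SWITCH_US, ratio), "us")
```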

Meanwhile I’ll quickly note that while the current Pascal drivers only implement thread/pixel level preemption for graphics and mixed workloads, as you might suspect from Pascal’s instruction level preemption capabilities for compute, this is something of an arbitrary decision. Because instruction level preemption is a fundamental Pascal ability, it could be made to do so for graphics as well as compute. The reason why NVIDIA has gone this route is one of cost/benefit tradeoffs; the DirectX team doesn’t believe that preemption at this level is necessary for graphics, in part because pixel shaders aren’t prone to long loops like dedicated compute kernels are, so the next thread boundary will come up relatively quickly. And preempting at the thread boundary is easier (i.e. there’s less state) than at the instruction level. This is something that NVIDIA could theoretically change course on in the future, but at least for now the belief is that the need/demand for such fine-grained preemption in a graphics context isn’t there.

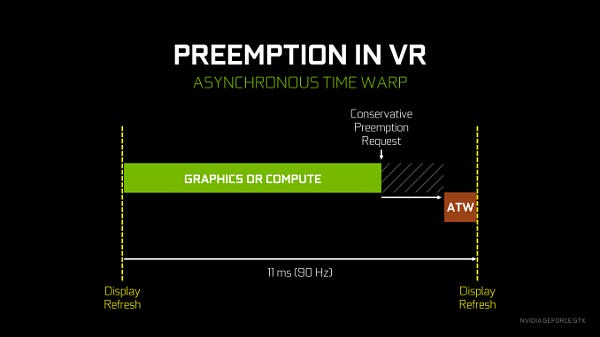

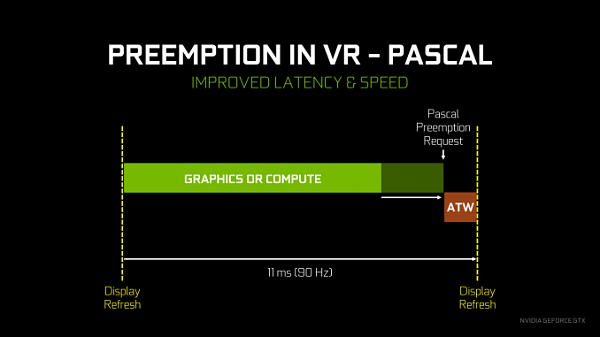

Finally, speaking of graphics, let’s talk about what pixel/thread level preemption is going to be used for. Overall there aren’t too many cases where you want to context switch in the middle of a graphics task, but there is one case that NVIDIA considers very important: asynchronous time warp. We’ve covered asynchronous time warp before, so I won’t fully rehash it here, but in short it’s a neat hack for VR where the frame being prepared for a headset is warped at the last possible moment, using up-to-the-millisecond positioning data, in order to give the illusion that the frame is newer than it actually is. The benefit is that it reduces the perceived input lag of VR, which is one of the causes of simulator sickness.

NVIDIA has supported async time warp since Oculus enabled the feature in their SDK, however given the above limitations of Maxwell 2, the architecture wasn’t able to execute an async time warp very efficiently. Assuming there weren’t any free SMs to work on a time warp right away, the need to wait until the end of a draw call meant that it could potentially be a relatively large amount of time until the context switch took place. This required the software stack to be relatively conservative with the preemption call, making the call early in order to ensure that the time warp could execute before it’s too late.

Pascal’s much improved preemption capabilities significantly change how conservative async time warp needs to be. Pascal’s finer-grained preemption means that the preemption request can come much later. The benefit is that this both better ensures that the async time warp actually occurs in time (there’s virtually no chance of a thread taking too long to finish) and means that much less GPU time is wasted from the GPU idling due to the conservative preemption request. The end result is that the performance impact of async time warp is reduced on Pascal as compared to Maxwell 2, allowing the GPU to spend more time in every refresh interval doing productive work.
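To show how the timing of the preemption request eats into the frame, here is a rough budget model for a 90Hz headset. It assumes the software stack must issue the request early enough to cover the worst-case preemption wait plus the warp itself, and that this lead time is lost to rendering. The wait and warp durations are invented for illustration and are not measurements of Maxwell 2 or Pascal.

```python
REFRESH_HZ = 90  # refresh rate of current consumer VR headsets


def refresh_interval_ms(refresh_hz=REFRESH_HZ):
    """Time between display refreshes, in milliseconds."""
    return 1000.0 / refresh_hz


def render_budget_ms(worst_case_preempt_ms, warp_ms, refresh_hz=REFRESH_HZ):
    """Rendering time left per refresh once the time warp lead is reserved."""
    # The request must be made this far ahead of the refresh to be safe.
    lead_ms = worst_case_preempt_ms + warp_ms
    return refresh_interval_ms(refresh_hz) - lead_ms


WARP_MS = 0.5  # hypothetical cost of the warp pass itself
scenarios = {
    "coarse (draw call boundary)": 2.0,  # hypothetical worst-case wait, ms
    "fine (pixel level)": 0.1,           # hypothetical worst-case wait, ms
}
for name, worst_case_ms in scenarios.items():
    budget = render_budget_ms(worst_case_ms, WARP_MS)
    print(f"{name}: {budget:.2f} ms of {refresh_interval_ms():.2f} ms left for rendering")
```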

All of that said, I don’t have a good feel for the benefit in terms of numbers; like so many other cases it’s going to depend on the specific game in use, and for that matter how well the game is saturating the GPU given the fixed 90Hz update interval. And though this puts Maxwell 2 in a lesser light, at least so far I haven’t personally noticed Maxwell 2 struggling to execute an async time warp. That Maxwell 2 does as well as it does is likely a testament to the viability of conservative preemption requests, which is to say that it will be interesting if we ever get a chance to see just how much performance Maxwell 2 had to leave on the table to reliably execute async time warps.

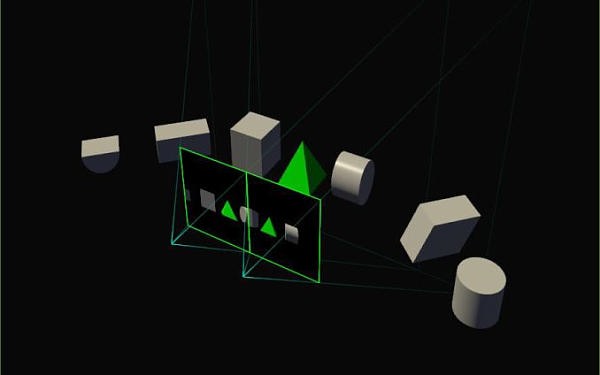

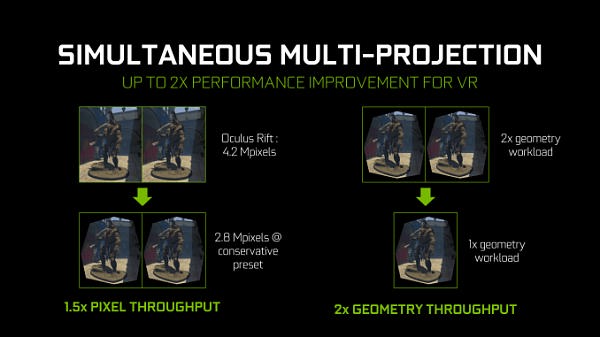

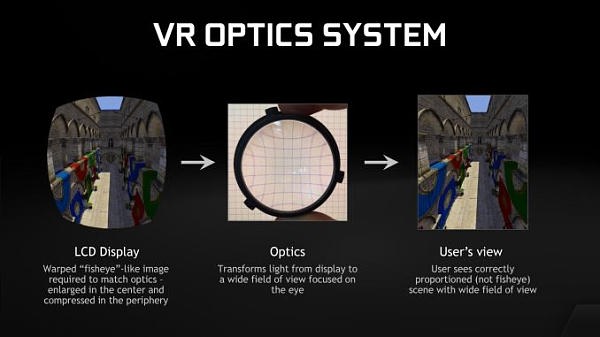

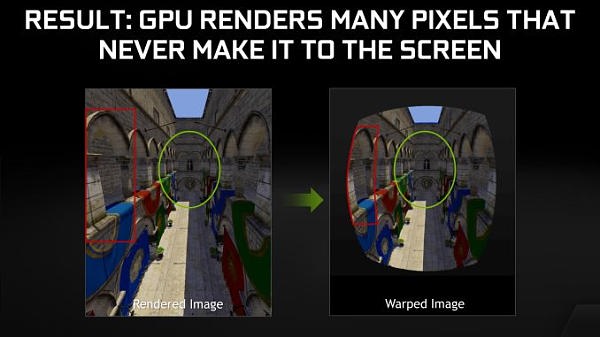

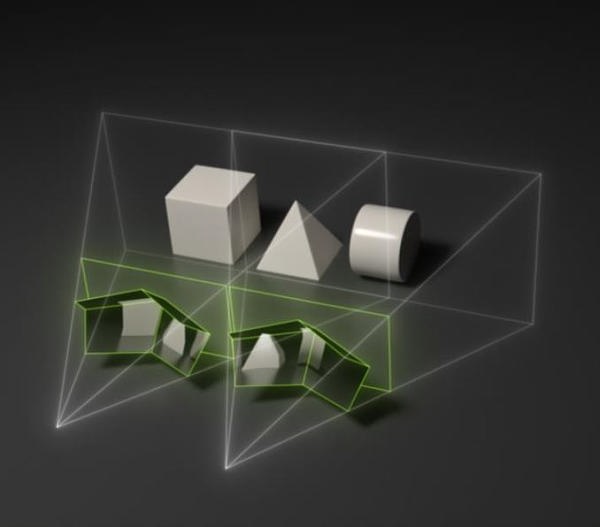

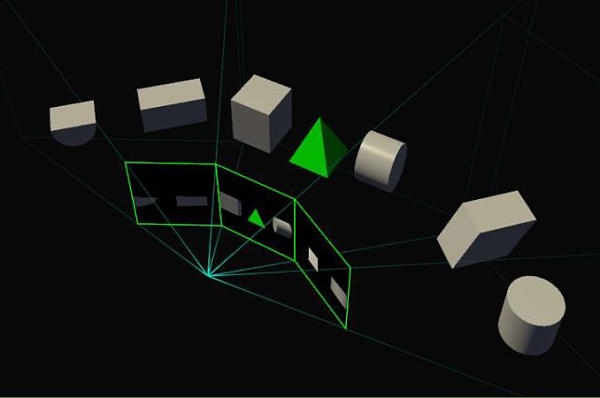

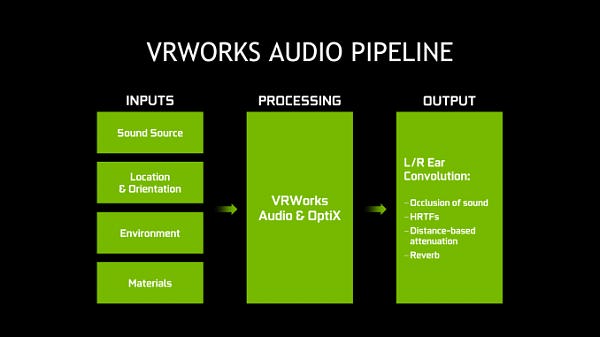

Simultaneous Multi-Projection: Reusing Geometry on the Cheap